I’m trying to take this course, but I think I’ve run into another bug.

First, I got stuck on this error:

. . .

```
InvalidRequestError: Error code: 400
```

See this other post: "Is this course broken?"

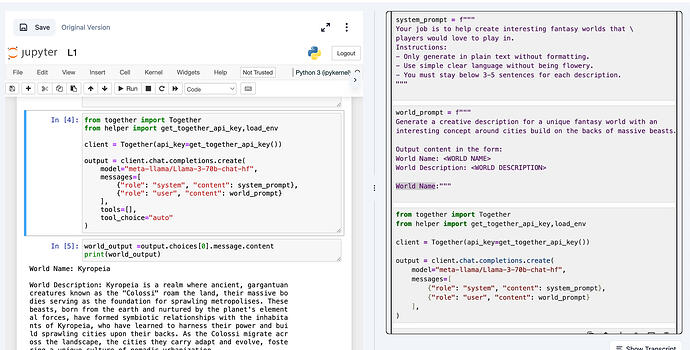

I got past this first error by replacing the `client.chat.completions.create()` call with the following:

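```python
# Workaround for the 400 error: pass tools=[] and tool_choice="auto" explicitly.
# system_prompt and world_prompt are defined earlier in the lesson.
output = client.chat.completions.create(
    model="meta-llama/Llama-3-70b-chat-hf",
    messages=[
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": world_prompt},
    ],
    tools=[],
    tool_choice="auto",
)
```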

Note the two added parameters: `tools=[]` and `tool_choice="auto"`.

But now, in lesson L2_Interactive AI Applications, I get a different error:

. . .

```
APIError: Error code: 422 - {"message": "Input validation error: `inputs` tokens + `max_new_tokens` must be <= 8193. Given: 6376 `inputs` tokens and 2048 `max_new_tokens`", "type_": "invalid_request_error", "param": null, "code": null}
```

It seems to me that the model's token limit has changed: the prompt (6376 tokens) plus the requested `max_new_tokens` (2048) comes to 8424, which exceeds the 8193 tokens the server now allows.
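To make the arithmetic concrete, here is a minimal sketch of how the generation budget could be shrunk to fit. The helper `fit_max_new_tokens` and the constant `CONTEXT_LIMIT` are hypothetical (not part of the course code); the only facts taken from the error are the rule `inputs + max_new_tokens <= 8193` and the numbers 6376 and 2048.

```python
# Hypothetical helper, not from the course notebook: choose a generation
# budget that satisfies the server rule  input_tokens + max_new_tokens <= limit.
CONTEXT_LIMIT = 8193  # value quoted in the 422 error message


def fit_max_new_tokens(input_tokens: int, requested: int = 2048,
                       limit: int = CONTEXT_LIMIT) -> int:
    """Return the largest max_new_tokens <= requested that still fits the limit."""
    available = limit - input_tokens
    if available <= 0:
        # The prompt alone fills the window, so only a shorter input
        # (e.g. less chat history) can help.
        raise ValueError(f"prompt uses {input_tokens} tokens, limit is {limit}")
    return min(requested, available)


if __name__ == "__main__":
    # From the error: 6376 + 2048 = 8424 > 8193, so the budget must drop.
    print(fit_max_new_tokens(6376))  # 1817
```

If the client's `create()` accepts an OpenAI-style `max_tokens` argument (an assumption; check the client the course uses), a value computed this way could be passed there. Counting the prompt's tokens accurately needs the model's own tokenizer, which this sketch leaves out.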

Does anyone have an idea how to fix it?

Thanks