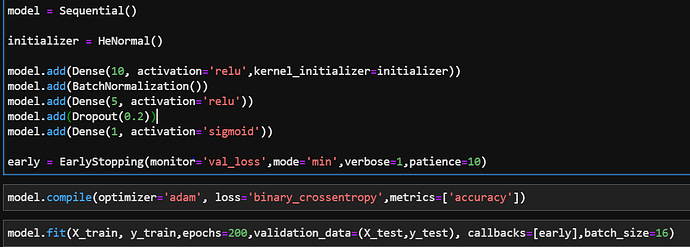

Hi there, I have been reading a debate about the ordering of BN and dropout in hidden layers. Here is my example using Keras:

As you can see, I have BN just after the input layer but before the activation function, then dropout after. I have noticed performance differences when I change the order, and I cannot see much of a consensus online.

Welcome to the forum @nickmuchi!

The question regarding the order of operations of batch normalization and dropout is a good one. As you point out, online research shows there are differing thoughts and approaches with different results.

My advice is to experiment using your particular model and data. Try changing the order of the BN and dropout layers, and even removing one of them. Compute one or more performance metrics over something on the order of 10 to 100 training runs of each configuration (to account for random weight initializations), and compare the mean and spread rather than a single run. Note too the effect each configuration has on overfitting (mainly addressed by the dropout layer) and on training time (mainly addressed by the BN layer).
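One way to organize that kind of comparison is sketched below. It is only a rough outline, not the layout from the original post: the `ORDERINGS` labels, the layer sizes, the dropout rate, and the helper names `build_model`, `compare_orderings`, and `dummy_train_and_score` are all made up for illustration. The dummy scorer just returns a seeded random number so the harness runs on its own; in practice you would replace it with a function that builds the model, fits it on your data, and returns a validation metric.

```python
import random
import statistics

# Candidate hidden-block layouts to compare; the labels are illustrative only.
ORDERINGS = {
    "bn_act_dropout": ("bn", "act", "dropout"),
    "act_bn_dropout": ("act", "bn", "dropout"),
    "act_dropout_bn": ("act", "dropout", "bn"),
    "bn_act_only": ("bn", "act"),
    "act_dropout_only": ("act", "dropout"),
}


def build_model(order, n_features, units=64, rate=0.3):
    """Build a small Keras binary classifier whose hidden block follows `order`."""
    # Imported lazily so the comparison harness below runs without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    make = {
        "bn": lambda: layers.BatchNormalization(),
        "act": lambda: layers.Activation("relu"),
        "dropout": lambda: layers.Dropout(rate),
    }
    # Dense layer without activation, so the activation can be placed freely.
    model = keras.Sequential([keras.Input(shape=(n_features,)), layers.Dense(units)])
    for step in order:
        model.add(make[step]())
    model.add(layers.Dense(1, activation="sigmoid"))
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


def compare_orderings(train_and_score, orderings, n_runs=10):
    """Run train_and_score(order, seed) n_runs times per layout (n_runs >= 2).

    Returns {label: (mean_score, sample_std_of_scores)}.
    """
    summary = {}
    for name, order in orderings.items():
        scores = [train_and_score(order, seed) for seed in range(n_runs)]
        summary[name] = (statistics.mean(scores), statistics.stdev(scores))
    return summary


def dummy_train_and_score(order, seed):
    """Placeholder scorer: ignores `order` and returns a seeded random number.

    Replace with code that calls build_model(order, ...), fits it on your
    data, and returns a validation metric.
    """
    return random.Random(seed).uniform(0.7, 0.9)


if __name__ == "__main__":
    results = compare_orderings(dummy_train_and_score, ORDERINGS, n_runs=10)
    for name, (mean, std) in results.items():
        print(f"{name:18s} mean={mean:.3f} std={std:.3f}")
```

In a real `train_and_score`, seeding each run (for example with `keras.utils.set_random_seed(seed)`) keeps runs reproducible while still varying the initialization across seeds. Recording wall-clock training time alongside the metric would cover the runtime comparison as well.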

You may or may not see significant performance differences, but if nothing else, you’ll learn a lot from this exercise! Depending on your available time and motivation, you could put together a thorough analysis and strong contribution to the online deep learning community.

Thanks for the response! I will give your suggestions a shot. Much appreciated!