AKazak

June 27, 2025, 7:37am

1

Greetings!

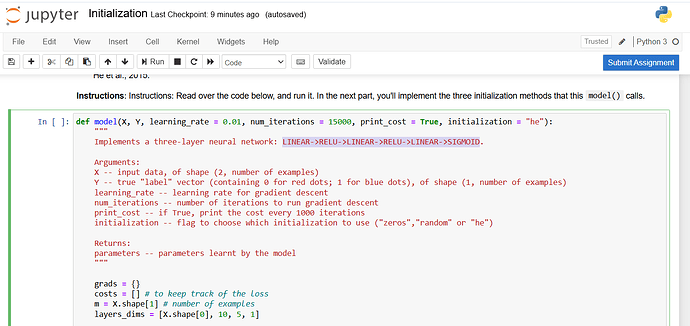

How do I read “LINEAR->RELU->LINEAR->RELU->LINEAR->SIGMOID” correctly to understand the structure of the three-layer neural network?

I do understand LINEAR and RELU, but a three-layer neural network should look like “RELU->RELU->SIGMOID”…

Thank you.

For this assignment, look at forward_propagation(X, parameters) in init_utils.py, which does this for you.

In general, a Dense layer has an activation parameter: the layer first performs the affine operation (WX + b) and then applies that activation function to the result.
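As a rough illustration of that idea, here is a minimal NumPy sketch of one such layer. The `dense` helper, the shapes, and the numbers are made up for this example; this is not the code in init_utils.py.

```python
import numpy as np

def relu(z):
    # Element-wise ReLU: negative values become 0.
    return np.maximum(0, z)

def dense(X, W, b, activation):
    """One layer: the affine step (LINEAR), then the activation."""
    Z = W @ X + b          # LINEAR: W is (units, inputs), X is (inputs, examples)
    return activation(Z)   # e.g. RELU or SIGMOID

# Hypothetical values: 2 input features, 1 example, 2 units.
X = np.array([[1.0], [-2.0]])
W = np.array([[1.0, 1.0], [2.0, 0.5]])
b = np.array([[0.5], [0.0]])

A = dense(X, W, b, relu)   # Z = [[-0.5], [1.0]], so A = [[0.0], [1.0]]
```

So "LINEAR->RELU" describes two operations, but both belong to the same layer.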


TMosh

June 27, 2025, 7:56am

3

The notation is a little bit misleading. I would have written that as:

[Linear with ReLU activation]->


AKazak

June 27, 2025, 8:17am

4

What does Linear mean here?

AKazak

June 27, 2025, 8:22am

5

OK, I see it now.

So the “LINEAR->RELU” layer first performs the affine operation (WX + b) and then applies the ReLU activation function.

In that case, I would suggest slightly changing the notation to “(LINEAR->RELU)->(LINEAR->RELU)->(LINEAR->SIGMOID)” or “(LINEAR | RELU) → (LINEAR | RELU) → (LINEAR | SIGMOID)”.
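To make the grouping concrete, here is a small NumPy sketch in which each (LINEAR->activation) pair is one entry in a list of layers. The layer sizes, the random weights, and the `forward` helper are assumptions for illustration only, not the assignment's actual code.

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, layers):
    """Apply each layer in turn: affine step, then that layer's activation."""
    A = X
    for W, b, activation in layers:
        Z = W @ A + b        # LINEAR
        A = activation(Z)    # RELU or SIGMOID
    return A

rng = np.random.default_rng(0)
sizes = [2, 4, 3, 1]                  # hypothetical: 2 inputs, hidden layers of 4 and 3, 1 output
activations = [relu, relu, sigmoid]   # (LINEAR->RELU)->(LINEAR->RELU)->(LINEAR->SIGMOID)

layers = [
    (rng.standard_normal((n_out, n_in)) * 0.1, np.zeros((n_out, 1)), act)
    for n_in, n_out, act in zip(sizes[:-1], sizes[1:], activations)
]

X = rng.standard_normal((2, 5))       # 5 made-up examples with 2 features each
Y_hat = forward(X, layers)            # shape (1, 5), values strictly between 0 and 1
```

Here `len(layers)` is 3 even though six operations run in total, which is why the network counts as three layers.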

Does it make sense?


TMosh

June 27, 2025, 4:15pm

7

Thanks for your suggestion.