I finished the assignment.

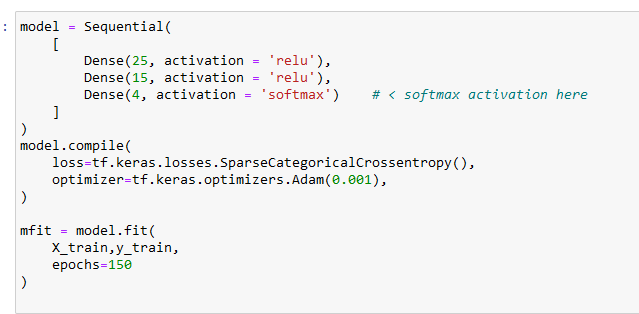

Afterwards, I increased the number of epochs to 150 to see whether that would remove the errors in the model. I noticed this in the loss values during training:

Epoch 72/150

157/157 [==============================] - 0s 2ms/step - loss: 3.3577e-04

Epoch 73/150

157/157 [==============================] - 0s 2ms/step - loss: 3.2813e-04

Epoch 74/150

157/157 [==============================] - 0s 2ms/step - loss: 0.0553

Epoch 75/150

157/157 [==============================] - 0s 2ms/step - loss: 0.0109

Epoch 76/150

157/157 [==============================] - 0s 2ms/step - loss: 0.0030

Epoch 77/150

157/157 [==============================] - 0s 2ms/step - loss: 8.2575e-04

Epoch 78/150

157/157 [==============================] - 0s 2ms/step - loss: 6.1341e-04

Epoch 79/150

157/157 [==============================] - 0s 2ms/step - loss: 5.3338e-04

This happened a few different times. The final model reached a loss on the order of 1e-05 and worked well. What I am looking for is insight into why these large blips occur at various epochs. There really isn’t enough in the course on Adam for me to understand this. I reduced the learning rate by half and still see the blips. Curious to learn more.
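
To pin down exactly where the blips are, here is a minimal sketch that flags epochs where the loss jumps sharply relative to the previous epoch. The loss values are copied from the log excerpt above; the helper name `find_spikes` and the 10x threshold are my own assumptions for illustration, not anything from the course.

```python
# Losses copied from the training log excerpt (epochs 72-79).
losses = {
    72: 3.3577e-04,
    73: 3.2813e-04,
    74: 0.0553,
    75: 0.0109,
    76: 0.0030,
    77: 8.2575e-04,
    78: 6.1341e-04,
    79: 5.3338e-04,
}


def find_spikes(losses, factor=10.0):
    """Return the epochs whose loss exceeds `factor` times the previous epoch's loss.

    `factor` is an arbitrary threshold chosen for illustration.
    """
    epochs = sorted(losses)
    spikes = []
    for prev, cur in zip(epochs, epochs[1:]):
        if losses[cur] > factor * losses[prev]:
            spikes.append(cur)
    return spikes


print(find_spikes(losses))  # prints [74]
```

In this excerpt only epoch 74 is flagged: the loss goes from about 3.3e-04 to 0.0553 and then takes several epochs to settle back down.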

Thanks