Hi,

In week 3, in the video about “Model selection and training/cross validation/test sets”, Professor Ng says the following:

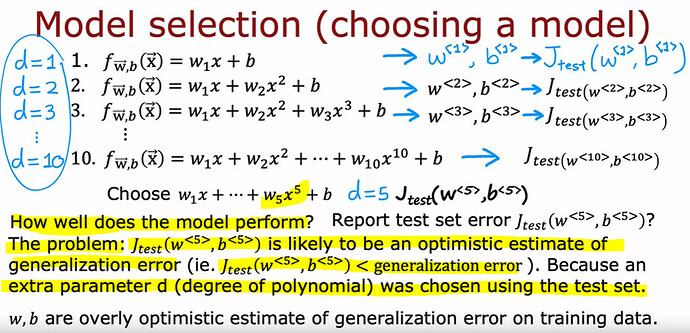

Finally, if you want to report out an estimate of the generalization error of how well this model will do on new data, you will do so using that third subset of your data, the test set, and you report out J_test(w4, b4). Notice that throughout this entire procedure, you fit these parameters using the training set. You then chose the parameter d, or the degree of polynomial, using the cross-validation set. Up until this point, you've not fit any parameters, either w or b or d, to the test set, and that's why J_test in this example will be a fair estimate of the generalization error of this model with parameters w4, b4. This gives a better procedure for model selection, and it lets you automatically make a decision like what order polynomial to choose for your linear regression model.

This model selection procedure also works for choosing among other types of models, for example, choosing a neural network architecture. If you are fitting a model for handwritten digit recognition, you might consider three models like this, maybe even a larger set of models than just these, but here are a few different neural networks: small, somewhat larger, and then even larger. To help you decide how many layers the neural network should have and how many hidden units per layer it should have, you can train all three of these models and end up with parameters w1, b1 for the first model, w2, b2 for the second model, and w3, b3 for the third model. You can then evaluate the neural networks' performance using J_cv, using your cross-validation set. Since this is a classification problem, the most common choice for J_cv would be to compute it as the fraction of cross-validation examples that the algorithm has misclassified. You would compute this for all three models and then pick the model with the lowest cross-validation error. If, in this example, the second model has the lowest cross-validation error, you would then pick the second neural network and use the parameters trained on this model. Finally, if you want to report out an estimate of the generalization error, you then use the test set to estimate how well the neural network that you just chose will do.
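As a rough illustration of the degree-selection procedure in the lecture excerpt, here is a minimal pure-Python sketch. Everything specific in it is an assumption for illustration, not something from the course: the synthetic quadratic data, the 60/20/20 split, the candidate degrees 1 to 6, and the helper names `fit_poly`, `predict`, and `cost`. The cost is the course's squared-error J without regularization, and the constant term `w[0]` plays the role of b.

```python
import random


def fit_poly(xs, ys, d):
    """Least-squares fit of a degree-d polynomial via the normal equations."""
    n = d + 1
    # Normal equations (A^T A) w = A^T y, where A[i][j] = xs[i] ** j.
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[p] = a[p], a[col]
        b[col], b[p] = b[p], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    # Back substitution; w[0] acts as the bias b, w[1:] as the weights.
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (b[r] - sum(a[r][c] * w[c] for c in range(r + 1, n))) / a[r][r]
    return w


def predict(w, x):
    return sum(wj * x ** j for j, wj in enumerate(w))


def cost(w, xs, ys):
    """Squared-error cost J = (1 / 2m) * sum((f(x) - y)^2), no regularization."""
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * len(xs))


# Assumed synthetic data: y = 1 + 2x - 3x^2 plus Gaussian noise, split 60/20/20.
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(100)]
ys = [1 + 2 * x - 3 * x ** 2 + rng.gauss(0, 0.1) for x in xs]
train = (xs[:60], ys[:60])
cv = (xs[60:80], ys[60:80])
test = (xs[80:], ys[80:])

params = {d: fit_poly(*train, d) for d in range(1, 7)}  # fit parameters on the training set only
j_cv = {d: cost(w, *cv) for d, w in params.items()}     # choose d on the cross-validation set only
best_d = min(j_cv, key=j_cv.get)
j_test = cost(params[best_d], *test)                     # test set used once, on the chosen model
print(f"chosen degree d={best_d}, J_cv={j_cv[best_d]:.4f}, J_test={j_test:.4f}")
```

Each candidate degree is fit on the training set alone, d is chosen by the lowest J_cv, and the test set is touched exactly once, after the choice has been made.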
It’s considered best practice in machine learning that if you have to make decisions about your model, such as fitting parameters or choosing the model architecture (a neural network architecture, or the degree of polynomial if you’re fitting a linear regression), you make all those decisions using only your training set and your cross-validation set, and not look at the test set at all while you’re still making decisions regarding your learning algorithm. Only after you’ve settled on one model as your final model should you evaluate it on the test set. Because you haven’t made any decisions using the test set, this ensures that the test set gives a fair and not overly optimistic estimate of how well your model will generalize to new data.
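A minimal sketch of the classification variant from the lecture, combined with the "only look at the test set at the end" discipline. Assumptions, all for illustration only: the three candidate models are hypothetical threshold classifiers standing in for trained neural networks, the 1-D data is made up, and `FinalTestSet` is a hypothetical helper that simply refuses a second evaluation. J_cv is computed as the fraction of misclassified cross-validation examples, as described in the lecture.

```python
def misclassification_rate(predict, xs, labels):
    """J for classification: fraction of examples whose predicted label is wrong."""
    wrong = sum(1 for x, y in zip(xs, labels) if predict(x) != y)
    return wrong / len(labels)


class FinalTestSet:
    """Hypothetical helper: holds the test set back and allows exactly one evaluation."""

    def __init__(self, xs, labels):
        self._xs, self._labels = list(xs), list(labels)
        self._used = False

    def evaluate(self, predict):
        if self._used:
            raise RuntimeError("test set already used; reusing it for decisions biases the estimate")
        self._used = True
        return misclassification_rate(predict, self._xs, self._labels)


def make_threshold_classifier(t):
    """Stand-in for a trained model (hypothetical): predicts 1 when x > t."""
    return lambda x: 1 if x > t else 0


# Made-up 1-D data whose true label is 1 when x > 0.5.
cv_xs = [i / 20 for i in range(20)]
cv_labels = [1 if x > 0.5 else 0 for x in cv_xs]
test_set = FinalTestSet(
    [i / 20 + 0.025 for i in range(20)],
    [1 if i / 20 + 0.025 > 0.5 else 0 for i in range(20)],
)

# Three candidate "models"; only the cross-validation set is used to choose among them.
candidates = {t: make_threshold_classifier(t) for t in (0.2, 0.5, 0.8)}
j_cv = {t: misclassification_rate(m, cv_xs, cv_labels) for t, m in candidates.items()}
best_t = min(j_cv, key=j_cv.get)

# Only now, with one final model chosen, look at the test set (once).
test_error = test_set.evaluate(candidates[best_t])
print(f"J_cv={j_cv}, chosen t={best_t}, test error={test_error:.2f}")
```

Any later call to `test_set.evaluate` raises `RuntimeError`, which is one way to make the "decide first, test once" rule explicit in code rather than relying on discipline alone.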

My question is: does this also apply when comparing completely different types of learning models, such as a neural network versus a random forest?

If that’s the case, I’d appreciate it if someone could explain why. The way I see it, I’d use the training set to fit the neural network and the random forest, the cross-validation set to tune the hyperparameters, architecture, etc. of each model, and then I would compare their performance on the test set. I wouldn’t be using the test set to make any decisions about either type of model; I would only use it to compare their performance.