Okay, Ben @hogansonb. Please take your time. So that you have something to cross-check against when you work on it again, I am sharing some printouts with you. You may not need them if you finish the exercise correctly.

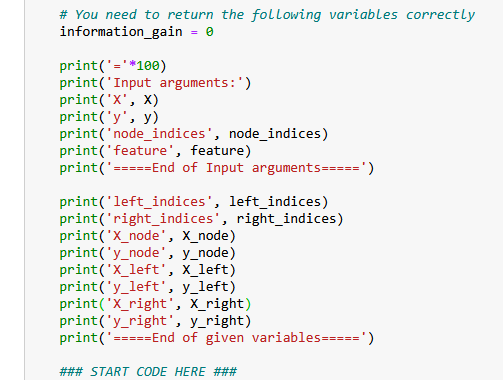

Starting from the code skeleton available to you in the hints section underneath the exercise’s code cell, I have added many print statements like below:

The idea here is simple: we print every variable, so you can easily add the same prints to your own code.
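As a minimal sketch of that kind of printing (not the course's code), a small helper can print each variable as `name value`, which is the layout used in the printouts in this post. The helper names `format_vars` and `show` are my own, and the tiny `X` and `y` arrays are made-up sample data, not the exercise's test data.

```python
import numpy as np


def format_vars(**variables):
    """Return one 'name value' string per keyword argument (hypothetical helper)."""
    return [f"{name} {value}" for name, value in variables.items()]


def show(**variables):
    """Print each variable on its own line as 'name value'."""
    for line in format_vars(**variables):
        print(line)


# Made-up sample values, only to demonstrate the printing.
X = np.array([[1, 1, 1], [1, 0, 1], [0, 1, 0]])
y = np.array([1, 1, 0])
node_indices = [0, 1, 2]
feature = 0

print("Input arguments:")
show(X=X, y=y, node_indices=node_indices, feature=feature)
print("=====End of Input arguments=====")
```

Calling `show(...)` again after each intermediate value is computed gives a trace you can compare line by line.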

Then, with all the code filled in, I ran the testing cell below it and got the following printouts. You can cross-check your numbers against mine: wherever you find an inconsistent value, you know where to double-check.

This may serve as first aid, as I may not be around tomorrow. Note that the printout is long because it covers several test cases, but they are separated by lines of equals signs.
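For orientation while comparing, the printed quantities appear to be related as follows. This assumes the standard binary entropy with base-2 logarithm, where $p_1$ is the fraction of labels equal to 1 in a node and the entropy is taken as 0 when $p_1$ is 0 or 1 (the printout also shows 0.0 for an empty branch). The symbols are my own shorthand for the printed names.

$$
\begin{aligned}
H(p_1) &= -p_1 \log_2 p_1 - (1 - p_1)\log_2(1 - p_1) \\
\text{weighted\_entropy} &= w_{\text{left}}\, H_{\text{left}} + w_{\text{right}}\, H_{\text{right}} \\
\text{information\_gain} &= H_{\text{node}} - \text{weighted\_entropy}
\end{aligned}
$$

For the first test case this gives $1.0 - (0.7 \times 0.985228 + 0.3 \times 0.918296) \approx 0.03485$, which agrees with the printed value.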

====================================================================================================

Input arguments:

X [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y [1 1 0 0 1 0 0 1 1 0]

node_indices [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

feature 0

=====End of Input arguments=====

left_indices [0, 1, 2, 3, 4, 7, 9]

right_indices [5, 6, 8]

X_node [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y_node [1 1 0 0 1 0 0 1 1 0]

X_left [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[1 0 1]

[1 0 0]]

y_left [1 1 0 0 1 1 0]

X_right [[0 1 1]

[0 0 0]

[0 1 0]]

y_right [0 0 1]

=====End of given variables=====

node_entropy 1.0

left_entropy 0.9852281360342515

right_entropy 0.9182958340544896

w_left 0.7

w_right 0.3

weighted_entropy 0.965148445440323

information_gain 0.034851554559677034

Information Gain from splitting the root on brown cap: 0.034851554559677034

====================================================================================================

Input arguments:

X [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y [1 1 0 0 1 0 0 1 1 0]

node_indices [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

feature 1

=====End of Input arguments=====

left_indices [0, 4, 5, 8]

right_indices [1, 2, 3, 6, 7, 9]

X_node [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y_node [1 1 0 0 1 0 0 1 1 0]

X_left [[1 1 1]

[1 1 1]

[0 1 1]

[0 1 0]]

y_left [1 1 0 1]

X_right [[1 0 1]

[1 0 0]

[1 0 0]

[0 0 0]

[1 0 1]

[1 0 0]]

y_right [1 0 0 0 1 0]

=====End of given variables=====

node_entropy 1.0

left_entropy 0.8112781244591328

right_entropy 0.9182958340544896

w_left 0.4

w_right 0.6

weighted_entropy 0.8754887502163469

information_gain 0.12451124978365313

Information Gain from splitting the root on tapering stalk shape: 0.12451124978365313

====================================================================================================

Input arguments:

X [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y [1 1 0 0 1 0 0 1 1 0]

node_indices [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

feature 2

=====End of Input arguments=====

left_indices [0, 1, 4, 5, 7]

right_indices [2, 3, 6, 8, 9]

X_node [[1 1 1]

[1 0 1]

[1 0 0]

[1 0 0]

[1 1 1]

[0 1 1]

[0 0 0]

[1 0 1]

[0 1 0]

[1 0 0]]

y_node [1 1 0 0 1 0 0 1 1 0]

X_left [[1 1 1]

[1 0 1]

[1 1 1]

[0 1 1]

[1 0 1]]

y_left [1 1 1 0 1]

X_right [[1 0 0]

[1 0 0]

[0 0 0]

[0 1 0]

[1 0 0]]

y_right [0 0 0 1 0]

=====End of given variables=====

node_entropy 1.0

left_entropy 0.7219280948873623

right_entropy 0.7219280948873623

w_left 0.5

w_right 0.5

weighted_entropy 0.7219280948873623

information_gain 0.2780719051126377

Information Gain from splitting the root on solitary: 0.2780719051126377

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[0]

[0]

[0]

[0]]

node_indices [0, 1, 2, 3, 4]

feature 0

=====End of Input arguments=====

left_indices [0, 1, 2]

right_indices [3, 4]

X_node [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y_node [[0]

[0]

[0]

[0]

[0]]

X_left [[1 0]

[1 0]

[1 0]]

y_left [[0]

[0]

[0]]

X_right [[0 0]

[0 1]]

y_right [[0]

[0]]

=====End of given variables=====

node_entropy 0.0

left_entropy 0.0

right_entropy 0.0

w_left 0.6

w_right 0.4

weighted_entropy 0.0

information_gain 0.0

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[0]

[0]

[0]

[0]]

node_indices [0, 1, 2, 3, 4]

feature 0

=====End of Input arguments=====

left_indices [0, 1, 2]

right_indices [3, 4]

X_node [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y_node [[0]

[0]

[0]

[0]

[0]]

X_left [[1 0]

[1 0]

[1 0]]

y_left [[0]

[0]

[0]]

X_right [[0 0]

[0 1]]

y_right [[0]

[0]]

=====End of given variables=====

node_entropy 0.0

left_entropy 0.0

right_entropy 0.0

w_left 0.6

w_right 0.4

weighted_entropy 0.0

information_gain 0.0

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[1]

[0]

[1]

[0]]

node_indices [0, 1, 2, 3, 4]

feature 0

=====End of Input arguments=====

left_indices [0, 1, 2]

right_indices [3, 4]

X_node [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y_node [[0]

[1]

[0]

[1]

[0]]

X_left [[1 0]

[1 0]

[1 0]]

y_left [[0]

[1]

[0]]

X_right [[0 0]

[0 1]]

y_right [[1]

[0]]

=====End of given variables=====

node_entropy 0.9709505944546686

left_entropy 0.9182958340544896

right_entropy 1.0

w_left 0.6

w_right 0.4

weighted_entropy 0.9509775004326937

information_gain 0.01997309402197489

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[1]

[0]

[1]

[0]]

node_indices [0, 1, 2, 3, 4]

feature 1

=====End of Input arguments=====

left_indices [4]

right_indices [0, 1, 2, 3]

X_node [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y_node [[0]

[1]

[0]

[1]

[0]]

X_left [[0 1]]

y_left [[0]]

X_right [[1 0]

[1 0]

[1 0]

[0 0]]

y_right [[0]

[1]

[0]

[1]]

=====End of given variables=====

node_entropy 0.9709505944546686

left_entropy 0.0

right_entropy 1.0

w_left 0.2

w_right 0.8

weighted_entropy 0.8

information_gain 0.17095059445466854

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[1]

[0]

[1]

[0]]

node_indices [0, 1, 2, 3]

feature 0

=====End of Input arguments=====

left_indices [0, 1, 2]

right_indices [3]

X_node [[1 0]

[1 0]

[1 0]

[0 0]]

y_node [[0]

[1]

[0]

[1]]

X_left [[1 0]

[1 0]

[1 0]]

y_left [[0]

[1]

[0]]

X_right [[0 0]]

y_right [[1]]

=====End of given variables=====

node_entropy 1.0

left_entropy 0.9182958340544896

right_entropy 0.0

w_left 0.75

w_right 0.25

weighted_entropy 0.6887218755408672

information_gain 0.31127812445913283

====================================================================================================

Input arguments:

X [[1 0]

[1 0]

[1 0]

[0 0]

[0 1]]

y [[0]

[1]

[0]

[1]

[0]]

node_indices [0, 1, 2, 3]

feature 1

=====End of Input arguments=====

left_indices []

right_indices [0, 1, 2, 3]

X_node [[1 0]

[1 0]

[1 0]

[0 0]]

y_node [[0]

[1]

[0]

[1]]

X_left []

y_left []

X_right [[1 0]

[1 0]

[1 0]

[0 0]]

y_right [[0]

[1]

[0]

[1]]

=====End of given variables=====

node_entropy 1.0

left_entropy 0.0

right_entropy 1.0

w_left 0.0

w_right 1.0

weighted_entropy 1.0

information_gain 0.0

All tests passed.
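If you want to recompute any of the numbers above independently, here is a small sketch that works directly from the printed `y_node`, `y_left` and `y_right` labels. It is not the exercise's code skeleton: the function names and signatures are my own, and it assumes binary labels, base-2 entropy, and an entropy of 0 for an empty or pure node.

```python
import numpy as np


def entropy(y):
    """Binary entropy (base 2) of a label array; 0.0 for empty or pure nodes."""
    y = np.asarray(y).ravel()
    if y.size == 0:
        return 0.0
    p1 = y.mean()  # fraction of labels equal to 1
    if p1 == 0 or p1 == 1:
        return 0.0
    return float(-p1 * np.log2(p1) - (1 - p1) * np.log2(1 - p1))


def information_gain(y_node, y_left, y_right):
    """Node entropy minus the size-weighted entropy of the two branches."""
    n = len(y_node)
    w_left = len(y_left) / n
    w_right = len(y_right) / n
    weighted = w_left * entropy(y_left) + w_right * entropy(y_right)
    return entropy(y_node) - weighted


# Labels copied from the first test case (feature 0) in the printout.
y_node = [1, 1, 0, 0, 1, 0, 0, 1, 1, 0]
y_left = [1, 1, 0, 0, 1, 1, 0]
y_right = [0, 0, 1]

gain = information_gain(y_node, y_left, y_right)
print("information_gain", gain)
```

The result should agree with the printed `information_gain` for that test case up to floating-point rounding. Swapping in the labels from another test case lets you check that one too.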