Hi, I am confused about how multi-dimensional arrays are represented in reality versus in Python code.

For example:

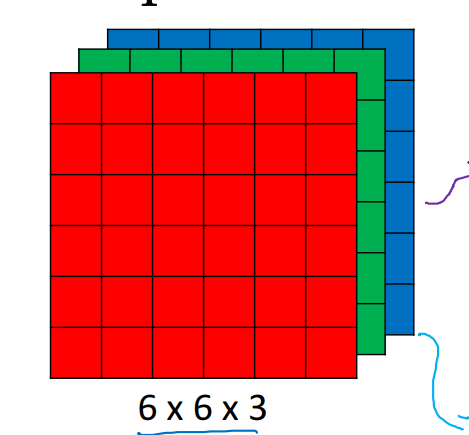

This is called a 6x6x3 image (array).

However, when I run code like

import numpy as np

x = np.ones((6, 6, 3))

print(x)

I get this output:

[[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]]]

To me, this output looks equivalent to a 6x3x6 image.
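One way to check how the printed blocks map onto the axes is to inspect the shape of the array and of its slices. This is only a minimal sketch: it assumes the common channels-last reading (height, width, channels), and the names `first_block` and `one_channel` are mine, purely for illustration.

```python
import numpy as np

x = np.ones((6, 6, 3))

# The shape tuple lists the axes from outermost to innermost.
print(x.shape)            # (6, 6, 3)

# Each printed block is x[i], a slice along the first (outermost) axis.
# Under the assumed (height, width, channels) reading, that is one row
# of the image: 6 pixels with 3 values each.
first_block = x[0]
print(first_block.shape)  # (6, 3)

# A single channel over the whole image is a slice along the last axis.
one_channel = x[:, :, 0]
print(one_channel.shape)  # (6, 6)
```

If that assumption holds, the six printed blocks would be image rows rather than channels.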

And if we add another dimension to represent a dataset of m RGB images of the same size, I believe it would be represented as 6x6x3xm, but to define it in Python the shape would have to be (m, 3, 6, 6).
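To compare the two batch layouts side by side, a small sketch can build a channels-last batch and transpose it to channels-first. The value `m = 4` and the names `batch_last` and `batch_first` are assumptions for illustration only. The snippet does not settle which layout a given course or library expects.

```python
import numpy as np

m = 4  # hypothetical number of images in the dataset

# Channels-last layout: (m, height, width, channels)
batch_last = np.ones((m, 6, 6, 3))

# Channels-first layout: (m, channels, height, width),
# obtained by reordering the axes of the same data.
batch_first = np.transpose(batch_last, (0, 3, 1, 2))

print(batch_last.shape)   # (4, 6, 6, 3)
print(batch_first.shape)  # (4, 3, 6, 6)
```

Both arrays hold the same values. Only the order of the axes differs.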

So, in the first assignment,

A_prev = np.random.randn(2, 5, 5, 3)

should represent a dataset of two 5-channel 5x3 images, right?

If so, then shouldn't this line

# Retrieve dimensions from the input shape

(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

be written as

# Retrieve dimensions from the input shape

(m, n_C_prev, n_H_prev, n_W_prev) = A_prev.shape

instead?
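The unpacking itself can be checked directly. The sketch below only shows which number lands in which name under each ordering. The `_alt` names are hypothetical, and the snippet does not decide which ordering the assignment intends.

```python
import numpy as np

A_prev = np.random.randn(2, 5, 5, 3)

# Ordering used in the assignment: channels last.
(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape
print(m, n_H_prev, n_W_prev, n_C_prev)   # 2 5 5 3

# Ordering proposed in the question: channels first.
(m_alt, n_C_alt, n_H_alt, n_W_alt) = A_prev.shape
print(m_alt, n_C_alt, n_H_alt, n_W_alt)  # 2 5 5 3
```

With the first ordering the array reads as two 5x5 images with 3 channels. With the second it reads as two 5x3 images with 5 channels.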

Sorry for the lengthy question.