In the function translate, I don't know how to define context for the step "Vectorize the text using the correct vectorizer". First, I used original_sentence from the test function, but it shows as not defined. Then I used english_vectorizer, and the error says I tried to define parameters by using a global variable.

Did you try searching similar threads?

Also you will find the solution in the assignment only, go back to previous sections where you will find some of the hints.

Is this the hint you mean? I searched similar threads but am still confused. I will explore a bit more.

context = english_vectorizer(texts).to_tensor()

Actually @skinx.learning

Until you share some detail of where you are getting stuck, others can only guess. It is better to share a screenshot of the error you mentioned, so we have an idea of where to direct you.

Regards

DP

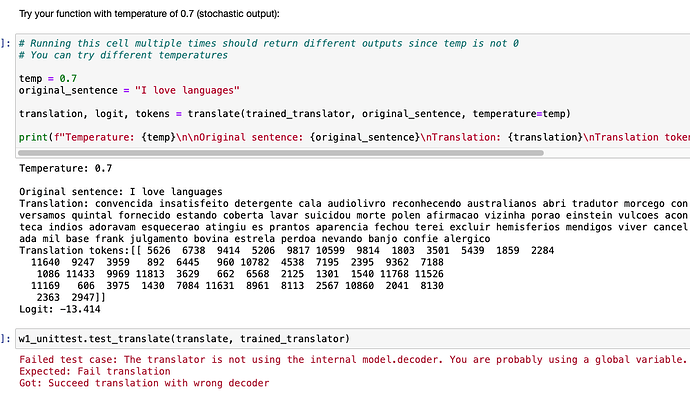

This is the error I get when I try to use english_vectorizer to vectorize the text,

and this is what happens with original_sentence.

Do you have any clue?

If the issue is with the conversion into a vector, refer to the section just before Exercise 5 translate (the instruction section), in the cell with the header

PROCESS SENTENCE TO TRANSLATE AND ENCODE

You will find all the hints there.

Let me know if you still can't get it.

Vectorize the text using the correct vectorizer

Using original_sentence gives you the global variable error, because you were required to use english_vectorizer on the text passed into the function.
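To illustrate the global-versus-parameter point in general terms, here is a minimal plain-Python sketch. It is not the assignment's code: `toy_vectorizer`, `encode_with_global` and `encode_with_parameter` are hypothetical names, and the tiny vocabulary is made up. It only shows why a function that reads a notebook-level variable can look fine in one cell and still fail elsewhere.

```python
# Hypothetical stand-ins: none of these names come from the assignment's solution.
original_sentence = "I love languages"  # a global defined in some test cell


def toy_vectorizer(sentence):
    """Map each lowercase word to a made-up integer id (toy vocabulary)."""
    vocab = {"i": 2, "love": 3, "languages": 4, "hello": 5, "world": 6}
    return [vocab.get(word, 1) for word in sentence.lower().split()]  # 1 = unknown


def encode_with_global(text):
    # Bug: ignores `text` and silently reads the notebook-level global.
    return toy_vectorizer(original_sentence)


def encode_with_parameter(text):
    # Uses only what was passed in, so it works for any caller.
    return toy_vectorizer(text)


print(encode_with_global("Hello world"))     # ids of the global sentence, not of the input
print(encode_with_parameter("Hello world"))  # ids of the input
```

If the global does not exist in the environment that runs the function, the first version raises a NameError instead, which would match the "not defined" message described earlier in the thread.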

Regards

DP

I edited tf.convert_to_tensor to use the original sentence, but I still don't get which vectorizer should be used in …(text).to_tensor()

Did you read this?

Yes. I used “english_vectorizer(text_tensor).to_tensor()” but it shows the following error.

Then that means you need to go back and look into the decoder section, as your error clearly mentions a wrong decoder.

Check whether, for

The dense layer with logsoftmax activation

you have set the units correctly, as the instruction mentions:

This one should have the same number of units as the size of the vocabulary since you expect it to compute the logits for every possible word in the vocabulary
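As a rough illustration of why the number of units has to equal the vocabulary size, here is a plain-Python stand-in for a dense layer followed by log-softmax. It is a sketch, not TensorFlow code and not the assignment's solution: `dense_log_softmax`, `hidden_units` and the sizes used are all hypothetical.

```python
import math
import random


def dense_log_softmax(hidden, weights, bias):
    """Toy dense layer followed by log-softmax for a single time step."""
    # One logit per output unit: logits[j] = sum_i hidden[i] * weights[i][j] + bias[j]
    logits = [
        sum(h * row[j] for h, row in zip(hidden, weights)) + bias[j]
        for j in range(len(bias))
    ]
    # Numerically stable log-softmax: subtract the log-sum-exp of the logits.
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return [v - log_sum_exp for v in logits]


random.seed(0)
hidden_units = 4  # hypothetical size of the decoder state
vocab_size = 7    # hypothetical vocabulary size

weights = [[random.uniform(-1, 1) for _ in range(vocab_size)] for _ in range(hidden_units)]
bias = [0.0] * vocab_size
hidden = [random.uniform(-1, 1) for _ in range(hidden_units)]

log_probs = dense_log_softmax(hidden, weights, bias)
print(len(log_probs))  # equals vocab_size: one score per word in the vocabulary
```

With any other number of units, the last dimension of the output would no longer line up with the vocabulary, so a shape check on that dimension would fail.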

I re-read it and I think I defined it correctly (not sure):

tf.keras.layers.Dense(
    units=vocab_size,
    activation=tf.nn.log_softmax
)

I notice that it shows the test passed, but the values do not match the expected ones.

I hope you know what the 14 in (64, 14) is.

So basically your output matches for the context shape, but for the next two your units are incorrect.

To understand this, you probably need to go once more through GRADED CLASS: CrossAttention, where the units are the same as you need for this output.

Also check the GRADED CELL Decoder: check how you define the attention layer, as it requires cross-attention.

Once you have defined all the layers, you use them in def call, where you need to call the layers exactly as you defined them above.

For example, the code instruction mentions

Pass the embedded input into the pre attention LSTM

but you are supposed to use self.pre_attention_rnn,

then Perform cross attention between the context and the output of the LSTM (in that order), which is basically self.attention.

Your issue could be with any of these, so kindly debug step by step, one line of code at a time.

Regards

DP

Please make use of the search function of the forum. This mistake is very common and there are a lot of posts how to fix it. For example, searching (64, 14, 256) and other keywords would give you many results. Some of them:

- example 1 (mixing up context with target)

- example 2 (embedding the context again in the decoder while you should be embedding the target)

- example 3 (the use of context (14) and target (15))

- example 4 (the units parameter)

- example 5 (Get the embedding of the input)

- example 6 (Paul shows the shapes at each internal step)

- example 7 (Anna points out global vs local variable usage)

- example 8

- plenty of other posts.

In short, you’re probably embedding the context not the target. But I would advise to make use of the forum’s search functionality prior to posting a new thread.
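A small plain-Python sketch of how that mix-up shows up in the shapes, assuming (as the numbers quoted in this thread suggest) a batch of 64, a context of 14 tokens, a target of 15 tokens and 256 units. The helpers `shape` and `embed` and the vocabulary size of 20 are hypothetical, purely for illustration:

```python
def shape(nested):
    """Return the shape of a rectangular nested list as a tuple."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


def embed(token_ids, table):
    """Toy embedding lookup: replace every token id by its row in `table`."""
    return [[table[token] for token in sentence] for sentence in token_ids]


batch, context_len, target_len, units, vocab = 64, 14, 15, 256, 20  # vocab is illustrative
table = [[0.0] * units for _ in range(vocab)]

context = [[1] * context_len for _ in range(batch)]  # source-language token ids
target = [[2] * target_len for _ in range(batch)]    # target-language token ids

print(shape(embed(context, table)))  # (64, 14, 256): the shape searched for in this thread
print(shape(embed(target, table)))   # (64, 15, 256): sequence length follows the target
```

The middle dimension simply follows whichever sequence was embedded, so a 14 where a 15 is expected is a strong hint that the context went into the embedding instead of the target.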

Cheers

Appreciated that. Thanks for your information.

No problem. I just wanted to help you find the solutions faster and more efficiently (it is also good for learning: trying to solve the problem from many angles instead of getting an answer right away). The general advice would be to:

- search the forum for the problematic function name

- search the forum for the problematic function output (either the error message, or test case output)

- just browse some topics on the specific course and specific week.

I believe the majority of mistakes can be found that way. For the other cases, I would advise posting what the error is and what you have tried. That way you narrow down the suggestions to your particular case.

Cheers