ckim


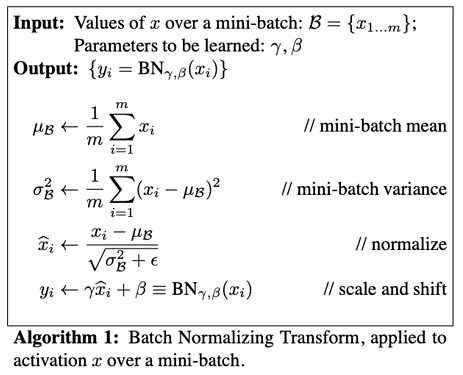

When estimating the variance sigma^2 of a mini-batch of size m, batch normalization uses

the mean mu = 1/m * sum(x_i)

the variance sigma^2 = 1/m * sum((x_i - mu)^2)

but the unbiased formula for estimating the variance is

sigma^2 = 1/(m-1) * sum((x_i - mu)^2)

because the 1/m estimate is biased. Why does batch normalization divide by m and not by m-1 here?


Great question @ckim!

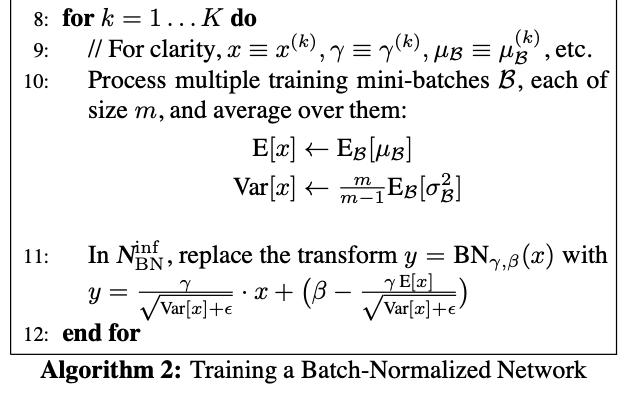

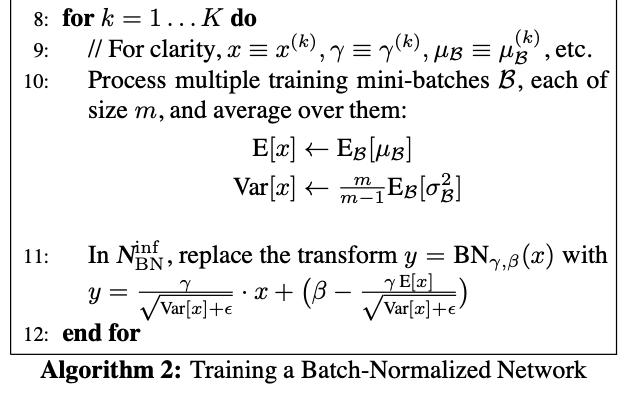

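From the batchnorm paper, the unbiased variance estimate is actually used during inference: the population variance is computed as Var[x] = m/(m-1) * E_B[sigma_B^2], where sigma_B^2 is the biased (1/m) mini-batch variance used for normalization during training.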
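To make the difference concrete, here is a minimal sketch in plain Python that computes both estimates for a single feature over one mini-batch. The function name `batch_variances` and the sample values are made up for illustration, and this is not how any particular framework implements batchnorm; it only shows that the two estimates differ by the factor m/(m-1).

```python
import statistics


def batch_variances(batch):
    """Return (biased, unbiased) variance estimates for one feature."""
    m = len(batch)
    mu = sum(batch) / m
    # 1/m estimate: what batchnorm uses to normalize during training.
    biased = sum((x - mu) ** 2 for x in batch) / m
    # Bessel-corrected estimate: rescale by m/(m-1), as done for the
    # population variance used at inference time.
    unbiased = biased * m / (m - 1)
    return biased, unbiased


batch = [1.0, 2.0, 4.0, 7.0]  # hypothetical activations, m = 4
biased, unbiased = batch_variances(batch)
print(biased, unbiased)  # 5.25 7.0

# Cross-check against the standard library's two variance functions.
print(statistics.pvariance(batch), statistics.variance(batch))
```

For large mini-batches the factor m/(m-1) is close to 1, so the two estimates are nearly the same; the gap only becomes noticeable for small m.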

For completeness, the batchnorm algorithm is summarized by

and

However, as you have just pointed out, whether it would be better to use the unbiased variance estimate during training as well has been debated before:
