cost = tf.keras.losses.categorical_crossentropy(y_true=tf.transpose(labels), y_pred=tf.transpose(logits),from_logits=True)

tf.reduce_mean(cost)

I had to transpose logits and labels before using tf.keras.losses.categorical_crossentropy, but it does not work for me. Can anyone help me?

CCE.call() expects the following:

y_true: Ground truth values. shape = [batch_size, d0, … dN]

y_pred: The predicted values. shape = [batch_size, d0, … dN]

If you don’t have those shapes, I suggest investigating why not. ‘I had to transpose…’ is a red flag.

However, I believe the problem is that you are mixing the syntax for calling the constructor (making a new instance of CCE) versus computing the loss on your training data.

Check the example for standalone usage in the docs for tf.keras.losses.CategoricalCrossentropy (TensorFlow Core v2.7.0):

cce = tf.keras.losses.CategoricalCrossentropy()

loss = cce(y_true, y_pred)
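To make the difference between the two calling styles concrete, here is a minimal sketch. The toy tensors are made up for illustration, and from_logits=True is passed because the question deals with raw logits (the docs example above uses the default). Under those assumptions, the class form should return the batch mean directly, while the function form returns one loss per sample:

```python
import tensorflow as tf

# Hypothetical toy data: 2 samples, 3 classes, laid out as (batch_size, num_classes).
y_true = tf.constant([[0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0]])
logits = tf.constant([[1.0, 3.0, 0.5],
                      [0.2, 0.1, 2.0]])

# Class form: construct the loss object once, then call it on the data.
# With the default reduction, the result is a scalar averaged over the batch.
cce = tf.keras.losses.CategoricalCrossentropy(from_logits=True)
loss_from_class = cce(y_true, logits)

# Function form: returns one loss value per sample, so the mean is taken explicitly.
per_sample = tf.keras.losses.categorical_crossentropy(y_true, logits, from_logits=True)
loss_from_function = tf.reduce_mean(per_sample)
```

Both styles are valid; the function form just leaves the reduction to the caller.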

Thanks for your help. I tried transposing the labels and logits before calling CCE, but I still get an error.

labels = tf.transpose(labels)

logits = tf.transpose(logits)

cost = tf.keras.losses.categorical_crossentropy(y_true=labels, y_pred=logits,from_logits=True)

tf.reduce_mean(cost)

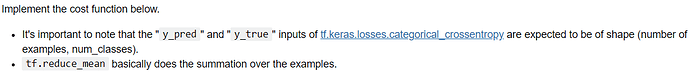

Yes, I was able to fix the problem. The logits and labels really do need to be transposed, because tf.keras.losses.categorical_crossentropy expects tensors of shape (num_samples, num_classes). The issue in my code came from tf.reduce_mean: its result has to be assigned, i.e. cost = tf.reduce_mean(cost) or cost = tf.reduce_mean(tf.keras.losses.categorical_crossentropy(y_true=labels, y_pred=logits, from_logits=True)). By the way, the argument from_logits=True is also needed: it tells the function that y_pred holds raw logits, so the softmax is applied inside the loss.
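For anyone landing here later, this is a minimal sketch of the working version. It assumes the data starts out as (num_classes, num_samples), as in my case; the toy values and the names labels_t and logits_t are made up for illustration:

```python
import tensorflow as tf

# Hypothetical toy data stored as (num_classes, num_samples): 3 classes, 2 samples.
labels = tf.constant([[0.0, 0.0],
                      [1.0, 0.0],
                      [0.0, 1.0]])
logits = tf.constant([[1.0, 0.2],
                      [3.0, 0.1],
                      [0.5, 2.0]])

# Transpose to the (num_samples, num_classes) layout the loss function expects.
labels_t = tf.transpose(labels)
logits_t = tf.transpose(logits)

# One loss value per sample; from_logits=True applies the softmax inside the loss.
per_sample = tf.keras.losses.categorical_crossentropy(
    y_true=labels_t, y_pred=logits_t, from_logits=True)

# Assign the reduced value; calling tf.reduce_mean without keeping the result has no effect.
cost = tf.reduce_mean(per_sample)
```

If the data is already stored as (num_samples, num_classes), the two transposes are not needed.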