Hey, do you need to manually work out the derivative of the sigmoid function?

For example, in this one I didn't get which line of code computes the sigmoid's derivative:

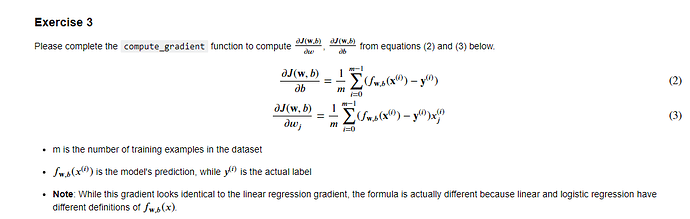

    for i in range(m):
        f_wb_i = sigmoid(np.dot(X[i],w) + b)          #(n,)(n,)=scalar
        err_i  = f_wb_i - y[i]                        #scalar
        for j in range(n):
            dj_dw[j] = dj_dw[j] + err_i * X[i,j]      #scalar
        dj_db = dj_db + err_i
    dj_dw = dj_dw/m                                   #(n,)
    dj_db = dj_db/m                                   #scalar
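
One way to check whether a sigmoid derivative is hiding somewhere in that loop is to compare it against a numerical gradient. The sketch below assumes the cost is the mean binary cross-entropy, which the snippet itself doesn't show. Under that assumption the sigmoid's derivative `f * (1 - f)` cancels against the derivative of the loss, leaving just `f - y`, which is the `err_i` term. The names `cost`, `gradient_loop`, `gradient_numeric` and the random data are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(X, y, w, b):
    # Assumed loss: mean binary cross-entropy (not shown in the snippet above)
    f = sigmoid(X @ w + b)
    return -np.mean(y * np.log(f) + (1 - y) * np.log(1 - f))

def gradient_loop(X, y, w, b):
    # Same structure as the loop above: no explicit sigmoid derivative anywhere
    m, n = X.shape
    dj_dw = np.zeros(n)
    dj_db = 0.0
    for i in range(m):
        err_i = sigmoid(np.dot(X[i], w) + b) - y[i]
        for j in range(n):
            dj_dw[j] += err_i * X[i, j]
        dj_db += err_i
    return dj_dw / m, dj_db / m

def gradient_numeric(X, y, w, b, eps=1e-6):
    # Central finite differences on the cost, one parameter at a time
    dj_dw = np.zeros_like(w)
    for j in range(w.size):
        step = np.zeros_like(w)
        step[j] = eps
        dj_dw[j] = (cost(X, y, w + step, b) - cost(X, y, w - step, b)) / (2 * eps)
    dj_db = (cost(X, y, w, b + eps) - cost(X, y, w, b - eps)) / (2 * eps)
    return dj_dw, dj_db

# Hypothetical toy data, only for the comparison
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
y = np.array([0.0, 1.0, 1.0, 0.0, 1.0])
w = rng.normal(size=3)
b = 0.5

dw_loop, db_loop = gradient_loop(X, y, w, b)
dw_num, db_num = gradient_numeric(X, y, w, b)
print(np.allclose(dw_loop, dw_num, atol=1e-6), np.isclose(db_loop, db_num, atol=1e-6))
```

If the two gradients agree, the loop is already the full derivative of the cost, and the sigmoid derivative has simply been simplified away by the algebra rather than left out.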