As shown in the picture, if a greedy person wants to train GPT in one day, does that person need 7,400 NVIDIA A100 GPUs?

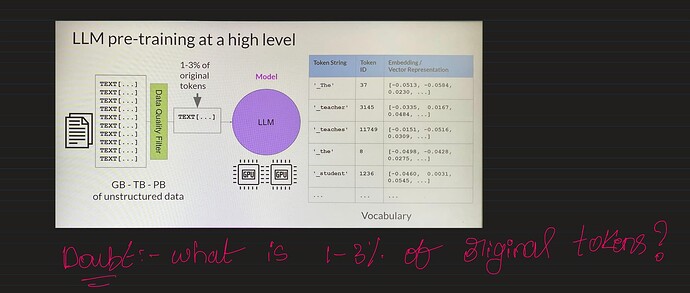

I also have a question about the data quality point the instructor mentioned: I didn't understand why only 1-3% of the original tokens can be used for training. I would appreciate your reply. Thank you.

Hi @Nitish_Satya_Sai_Ged,

Regarding the number of A100s needed to train GPT in one day, that's an interesting idea! I see your math, and it seems plausible. Is it the actual number? I don't know. I'm sure there are many other considerations, such as the number of batches, re-trainings, calibrations, etc. But it would be nice to know that information.
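For reference, here is a minimal sketch of the back-of-envelope arithmetic. It assumes the picture uses roughly 3,700 petaflop/s-days of training compute for GPT-3 and about 2 A100 GPUs to sustain 1 petaFLOP/s; both figures are my assumptions, since the slide isn't reproduced here. The `utilization` parameter is hypothetical and only shows how the count grows when the GPUs don't run at full efficiency, which is one of those "other considerations".

```python
# Back-of-envelope estimate: how many A100s to train GPT-3 in one day?
# Assumed inputs (not verified against the slide): ~3,700 petaflop/s-days
# of training compute and ~2 A100 GPUs per petaFLOP/s of throughput.

def gpus_needed(compute_pf_days: float, gpus_per_pflops: float,
                days: float = 1.0, utilization: float = 1.0) -> float:
    """Number of GPUs needed to finish `compute_pf_days` of work in `days`.

    utilization: hypothetical fraction of the assumed per-GPU throughput
    actually achieved (1.0 = idealized full efficiency).
    """
    return compute_pf_days * gpus_per_pflops / (days * utilization)

ideal = gpus_needed(3700, 2)
print(f"Idealized: {ideal:,.0f} A100s")  # Idealized: 7,400 A100s

realistic = gpus_needed(3700, 2, utilization=0.5)
print(f"At 50% utilization: {realistic:,.0f} A100s")  # At 50% utilization: 14,800 A100s
```

Under these assumptions the idealized case gives 7,400 GPUs; if the hardware only reaches half of its assumed throughput, the count doubles to 14,800.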

Regarding the second question: where does that 1-3% come from? Again, I can only offer my own understanding here. When you want to train one of these models, you need a lot of data, so you scour the internet and gather tons of it. But you don't want to use all of that data right away. The people in charge may want to eliminate websites with inappropriate content (and they decide what is inappropriate for their model), websites on certain topics, or websites with certain biases. At least according to the instructor's knowledge and experience, what remains ends up being around 1-3% of the raw data originally collected. Is this always the case? Is it accurate? I don't know, but my main takeaway is that we end up using only a portion of all the data we initially collect, and I think the exact fraction depends very much on each case.
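To make the idea concrete, here is a toy sketch of filtering a raw crawl and measuring what fraction of tokens survives. The blocklist, the excluded-topic list, the documents, and the whitespace token count are all hypothetical placeholders; real pipelines use much richer rules and tokenizers, so the fraction printed here says nothing about the actual 1-3% figure.

```python
# Toy illustration: drop documents a team decides not to train on,
# then measure the fraction of tokens that remain.
# BLOCKED_TERMS and EXCLUDED_TOPICS are hypothetical stand-ins.

BLOCKED_TERMS = {"spamword"}    # stand-in for "inappropriate content"
EXCLUDED_TOPICS = {"gambling"}  # stand-in for topics the team chooses to drop


def count_tokens(doc: str) -> int:
    # Crude whitespace token count; real tokenizers split text differently.
    return len(doc.split())


def keep(doc: str) -> bool:
    # Keep a document only if it mentions no blocked term and no excluded topic.
    words = set(doc.lower().split())
    return not (words & BLOCKED_TERMS) and not (words & EXCLUDED_TOPICS)


def kept_token_fraction(docs: list[str]) -> float:
    total = sum(count_tokens(d) for d in docs)
    kept = sum(count_tokens(d) for d in docs if keep(d))
    return kept / total if total else 0.0


raw_docs = [
    "a useful article about physics",
    "buy now spamword spamword",
    "best gambling tips here",
    "notes on linear algebra",
]
print(f"{kept_token_fraction(raw_docs):.0%} of tokens kept")
```

The point is only the shape of the process: every rule the team adds removes more documents, so the share of the original tokens that reaches training depends on how strict those rules are.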

Hope this sheds some light!

Juan
