I feel a bit embarrassed here, but I’ve looked through most of the surrounding context clues in UNQ C6 and I can’t find anything pointing me to how the different Nones should be programmed to achieve the desired result. There are instructions available, but I need just a bit of extra elaboration to get over the disconnect. Thanks!

Hi @Bradley_M_Messer, welcome to the community!

The Nones are there just as a placeholder so you know the number of lines you need to implement. Now for the instructions:

First, you need to generate a fake image from noise. You can use the generator function gen which was passed as an argument to get_disc_loss. gen takes a random vector as input, which you can generate with get_noise from UNQ_C3.

Then you’ll want the discriminator’s predictions on both the real (passed as the argument real) and fake (from the step above) images. The disc model (also passed as an argument) does exactly that.

Calculate both losses using the criterion function (also passed as an argument). Remember the discriminator wants to predict the fake images as fake (all zeros) and the real images as real (all ones). Set your ground truths accordingly.

Finally, simply average over both losses to get the final loss.
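To make the last two steps concrete, here is a minimal sketch of just the labeling and averaging. It assumes the criterion is `nn.BCEWithLogitsLoss` (the thread does not say which loss the assignment passes in), and it uses hand-written stand-in tensors in place of real discriminator outputs.

```python
import torch
from torch import nn

# Assumption: a binary cross-entropy criterion that takes raw logits.
criterion = nn.BCEWithLogitsLoss()

# Stand-in discriminator outputs (raw logits) for a batch of 4 images each.
fake_pred = torch.tensor([[-2.0], [-1.5], [0.3], [-0.7]])
real_pred = torch.tensor([[1.8], [2.2], [-0.1], [0.9]])

# Fake images should be labeled 0, real images 1.
# zeros_like / ones_like give targets with matching shape, dtype and device.
fake_loss = criterion(fake_pred, torch.zeros_like(fake_pred))
real_loss = criterion(real_pred, torch.ones_like(real_pred))

# The final discriminator loss is the plain average of the two.
disc_loss = (fake_loss + real_loss) / 2
```

Building the targets with `zeros_like` and `ones_like` also avoids having to set the device by hand, since they inherit it from the predictions.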

Hope this helps you finish the assignment. Feel free to post further questions on specific steps.

Cheers!

Thanks. Unfortunately this is going to be more handholding than I would like, but it’s really a matter of being able to digest all the instructions and context clues into something meaningful. So here’s what I’ve put as my first step:

noise = gen(get_noise(z_dim, z_dim, device='cpu')).detach()

Does this make sense?

That’s close, but you’re making it more complicated by packing everything into one line. I wrote that portion of the code as two separate lines, which I think makes things a bit easier to read and understand. Of course that is a matter of taste, not correctness.

But speaking of correctness, there is one actual mistake there: you should not “hard-code” the device value to ‘cpu’. That is passed in as an argument to this function, right?

Actually there is one other correctness issue: check the arguments to the get_noise routine. You have specified them as both being the same value, but that’s not my reading of the definition of that API.
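As an illustration of why the two arguments differ, here is a hypothetical stand-in for the noise helper. The signature `(n_samples, z_dim, device)` is an assumption based on how the function is used in this thread, not a copy of the course code.

```python
import torch

def get_noise(n_samples, z_dim, device="cpu"):
    # Hypothetical stand-in: one z_dim-long noise vector per requested sample.
    return torch.randn(n_samples, z_dim, device=device)

# In the assignment the device would come from the enclosing function's
# argument; it is set here only so the snippet runs on its own.
device = "cpu"
noise = get_noise(n_samples=5, z_dim=64, device=device)
print(noise.shape)  # torch.Size([5, 64])
```

The first argument is how many fake images you want, and the second is the length of each noise vector, so passing `z_dim` for both only gives the right batch size by coincidence.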

Also in terms of aesthetics (not correctness) the output of that line of code is not just “noise”: it’s the actual “fake” images created by the generator, right? The names of the variables don’t really matter. You can call it “fred” or “barney” if you like, but I think it helps understandability to use variable names that reflect the nature of the contents.

Thanks for replying! I’m big on syntactic sugar and advanced usage of the language (can’t wait till we actually get match-case statements in Python 3.10) and love some heart and soul in my programming (like good music, but for software engineering).

I was able to make a lot more connections:

{moderator edit - solution code removed}

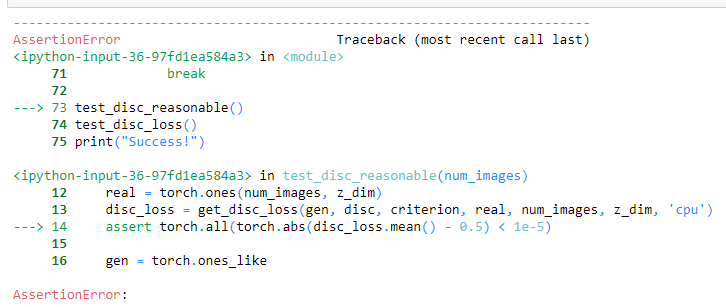

and get the following assertion error telling me something is wrong in my definition somewhere (most likely disc_loss), though that was easy enough and can help clarify the documentation if it’s not sufficient:

Alright, so I got through the discriminator block by comparing the end of this first week’s lab with the end of the second week’s lab, and that gave me a lot of good info to work with.

Currently, I’m getting stuck trying to work through the generator aspect when it comes to generator loss shown below:

{moderator edit - solution code removed}

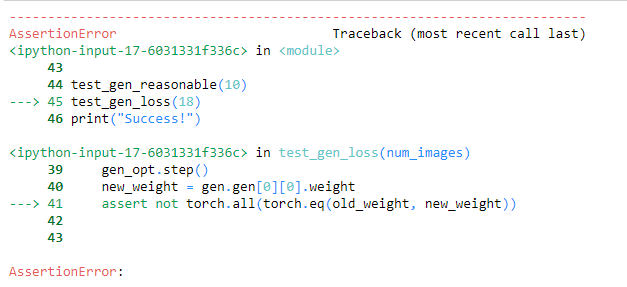

and am hitting an issue on the final assert test of the graded assignment:

I’m not sure what’s fully going on here at the moment, but this tells me that my model isn’t actually learning, meaning I probably have something wrong with my generator loss. I’m splitting my attention between a few things at the moment, so I’ll have to come back later to work this out fully. Though I might have luck moving on to lab 3 and seeing if I get a similar answer, like I did from lab 2.

I think it’s simple: the problem is that you detached the generator output, so you don’t get any gradients computed. You want to do that when you are training the discriminator, right? But it doesn’t make sense to do that when you are training the generator …

The situation is fundamentally asymmetric: when you train the discriminator, you don’t need the gradients of the generator. But when you train the generator, the gradients of the generator go through the discriminator by definition, because the gradients are of the cost J, which is the output of the discriminator. So in the latter case, you need to compute the gradients of both the generator and the discriminator, but you only apply the gradients of the generator.
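Here is a small sketch of that asymmetry. It uses tiny linear layers as stand-ins for the real generator and discriminator and assumes `nn.BCEWithLogitsLoss` as the criterion; all of the names are illustrative, not the assignment's code.

```python
import torch
from torch import nn

torch.manual_seed(0)
gen = nn.Linear(4, 3)    # toy generator: noise -> "image"
disc = nn.Linear(3, 1)   # toy discriminator: "image" -> logit
criterion = nn.BCEWithLogitsLoss()  # assumed criterion
noise = torch.randn(8, 4)

# Discriminator step: detach the fake images so backward() stops at them.
fake = gen(noise).detach()
pred = disc(fake)
disc_loss = criterion(pred, torch.zeros_like(pred))
disc_loss.backward()
gen_grad_after_disc_step = gen.weight.grad        # still None: nothing flowed back
disc_got_grad = disc.weight.grad is not None      # the discriminator did get gradients

disc.zero_grad()

# Generator step: no detach, so gradients flow through disc back into gen.
fake = gen(noise)
pred = disc(fake)
gen_loss = criterion(pred, torch.ones_like(pred))
gen_loss.backward()
gen_got_grad = gen.weight.grad is not None        # the generator now has gradients
disc_grad_also_computed = disc.weight.grad is not None  # computed, but not applied
```

In the generator step the discriminator's gradients are computed as a side effect; they are never applied because only the generator's optimizer takes a step.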

The error is that you should only detach the generator output when training the discriminator. You also detach the discriminator output, so you don’t compute any gradients for the discriminator. Oh, sorry, that is an error, but it won’t cause the actual loss values to be wrong, which is what that assertion is telling you. There’s yet another error: the ground truth for the real images should be 1, right? They make that point in the docstring, if it wasn’t clear just from the overall intent here.

I’m not sure it’s a correctness issue, but the other thing to notice is that there is no need to detach the generator twice.