Module 4 describes the problem of hallucinations in LLMs.

The conclusion is:

There’s no perfect fix, but combining RAG, grounding, prompting, citation requirements, and evaluation tools significantly reduces hallucinations and improves reliability in LLM-generated content.

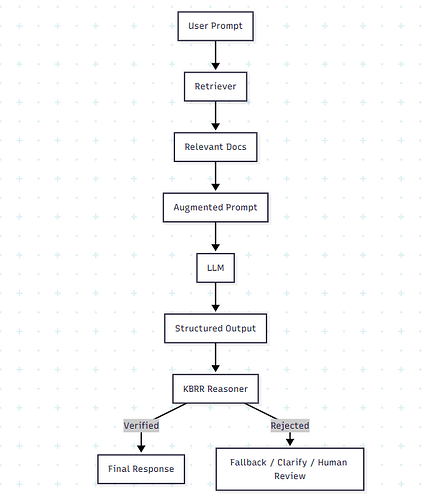

I’m wondering why there is no mention of integrating KBRR (Knowledge Base Representation and Reasoning) from Symbolic AI into the RAG pipeline.

For example, we could add a reasoning engine to the RAG process that verifies the LLM’s outputs against a structured KB, instead of relying only on grounding in unstructured text.

    graph TD
        A[User Prompt] --> B[Retriever]
        B --> C[Relevant Docs]
        C --> D[Augmented Prompt]
        D --> E[LLM]
        E --> F[Structured Output]
        F --> G[KBRR Reasoner]
        G -->|Verified| H[Final Response]
        G -->|Rejected| I[Fallback / Clarify / Human Review]

How to apply it (using the module example)

The LLM generates the output in a structured format.

    {
      "claim": "Student discount is 10%",
      "basis": "similar to senior and new customer discounts",
      "discounts": {
        "senior": "10%",
        "new_customer": "10%",
        "student": "10%"
      }
    }

Then we can call a symbolic reasoning module to check if the claim (e.g., student discount = 10%) can be verified in the known knowledge base.

Known facts (KB):

    Discount(senior) = 10%
    Discount(new_customer) = 10%

Because the KB contains no Discount(student) fact, the reasoning engine can detect that the LLM invented it.
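To make the check concrete, here is a minimal sketch in Python. It assumes the KB is just a dictionary of discount facts and that the LLM output arrives as the JSON shown above. The names `KNOWN_DISCOUNTS` and `find_unsupported_discounts` are hypothetical, and a real KBRR engine would presumably use a rule or ontology reasoner rather than a dictionary lookup.

```python
import json

# Hypothetical KB holding only the two facts listed above.
KNOWN_DISCOUNTS = {"senior": "10%", "new_customer": "10%"}


def find_unsupported_discounts(llm_output: dict, kb: dict) -> dict:
    """Return every discount the LLM asserted that the KB does not confirm."""
    unsupported = {}
    for customer_type, value in llm_output.get("discounts", {}).items():
        # A fact is supported only if the KB has the same key with the same value.
        if kb.get(customer_type) != value:
            unsupported[customer_type] = value
    return unsupported


llm_json = """
{
  "claim": "Student discount is 10%",
  "basis": "similar to senior and new customer discounts",
  "discounts": {"senior": "10%", "new_customer": "10%", "student": "10%"}
}
"""

llm_output = json.loads(llm_json)
print(find_unsupported_discounts(llm_output, KNOWN_DISCOUNTS))  # {'student': '10%'}
```

This sketch treats anything absent from the KB as unverified (a closed-world assumption), which is itself a design choice: it is safe for a price list but may be too strict for open-ended domains.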

Response

- If verified → proceed.

- If unverified → trigger fallback response or human review:

“I couldn’t find information about student discounts, but I can confirm we offer 10% for seniors and new customers.”
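The verified/rejected routing could look roughly like the sketch below. It assumes the claim has already been reduced to a key/value pair, and the names `KB` and `route` are hypothetical. The fallback wording is simplified compared with the example sentence above, and a human-review hook would replace or supplement the returned text in practice.

```python
from typing import Tuple

# Hypothetical KB with the two confirmed discount facts.
KB = {"senior": "10%", "new_customer": "10%"}


def route(claim_key: str, claim_value: str, draft: str, kb: dict) -> Tuple[str, str]:
    """Return (status, text): the LLM draft if the KB confirms the claim, else a fallback."""
    if kb.get(claim_key) == claim_value:
        return "verified", draft
    # Fallback mentions only facts that the KB actually contains.
    confirmed = ", ".join(f"{key}: {value}" for key, value in kb.items())
    fallback = (
        f"I couldn't find information about {claim_key} discounts. "
        f"Confirmed discounts: {confirmed}."
    )
    return "rejected", fallback


status, text = route("student", "10%", "Students get 10% off.", KB)
print(status)
print(text)
```

Returning a status alongside the text lets the caller decide whether to send the fallback directly or escalate to human review.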

So why is KBRR not widely used in RAG systems?

- Is it hard to integrate?

- Does it not scale?

- Is there another reason?

– Javier