Hi, all

While learning about the YOLO loss function, I found this loss function in a tutorial (https://youtu.be/PYpn1GSwWnc?list=PL1u-h-YIOL0sZJsku-vq7cUGbqDEeDK0a&t=1968).

In it, W (indexed by i) and H (indexed by j) are the numbers of grid cells along the x axis and y axis, and A (indexed by k) is the number of anchor boxes.

I have two questions about the total loss function:

- What does 1_{MaxIOU<Thresh} mean?

- The loss function has one term for cells with no object and one for cells with an object. How is the loss calculated when a cell has an object matched to one anchor box, but the other anchor boxes in that cell do not match any object?

Yes, that is kind of a creative notation that I’ve never seen in exactly that form before. I think that is just expressing how “non-max suppression” works here. It excludes the values for the objects that are being dropped because they fall below the IOU threshold. Prof Ng covered that concept in the lectures and it’s also covered in the YOLO assignment, although he does not give the full details on how the loss function works.

I think that is what the 1_k^{truth} value takes care of, in a way analogous to how the IOU value worked above: it’s a Boolean vector that will be zero for the k values for which there are no recognized objects.

But I have never really looked at the YOLO loss function logic before, so I’m just winging it here. There are lots of great threads on the forum about YOLO. E.g. here’s one with some discussion of the loss function and there are more like this one that explain how YOLO works and is trained.

Thanks for your reply. Regarding what you said about “non-max suppression”:

That description gives me the impression that “non-max suppression” is used during training, since the loss function is used in training. But in the YOLO assignment, “non-max suppression” is only applied after training has finished, to produce the final bounding boxes.

So am I missing something in my understanding of YOLO?

Ok, sorry, I don’t really know the answer here. It definitely looks like in that loss formula they are taking the IOU into account, which would imply that it is part of the training. We have to hope that someone who knows more about YOLO than I do will be able to shed light on this point!

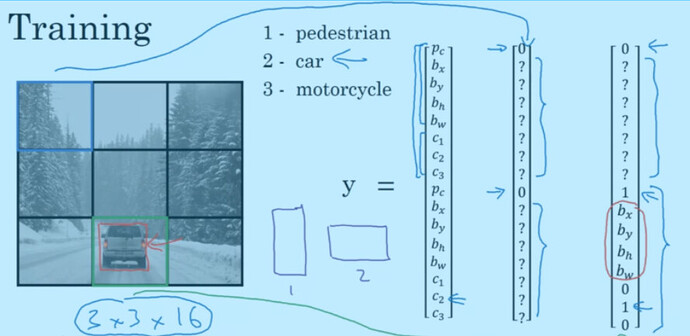

If there is more than one object in the cell, the loss function will just use the “Loss for cells with objects” part. In that case, can I say that for an anchor box that does not match any object, we only need to compare the Pc of the label with the Pc of the prediction, as mentioned in Prof Ng’s tutorial?

But anyway thank you!

I will check the resources you linked.

I would say there are two fundamental organizing concepts of the YOLO loss functions, at least the early versions from the Redmon team. I haven’t looked at the later versions from the ultralytics branch at all.

1. There are separate components for location, shape, and class. Each has its own activation function and nuances, but all are scaled so that they can be meaningfully combined into a single loss value per detector, which is what they called a grid cell + anchor box tuple.

2. Clip or weight the loss so that the error contributions from locations that actually have objects in the training data are emphasized, and locations that don’t have objects in the training data are minimized (or completely ignored).
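To make those two ideas concrete, here is a minimal Python sketch of a per-detector loss. It is only an illustration under assumptions, not the actual YOLO code: the names `detector_loss` and `total_loss`, the dict layout, the plain squared-error terms, and the weight values are all hypothetical. It also shows one way to think about a cell where one anchor box has an object and the others do not: each detector is scored on its own.

```python
# Hypothetical weights, chosen only for illustration.
LAMBDA_COORD = 5.0   # emphasis on location/shape error where an object exists
LAMBDA_OBJ = 1.0     # objectness weight where an object exists
LAMBDA_NOOBJ = 0.5   # reduced objectness weight where no object exists


def squared_error(pred, target):
    """Sum of squared differences between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target))


def detector_loss(pred, truth):
    """Loss for one detector (one grid cell + anchor box pair).

    pred and truth are dicts with keys "box" (x, y, w, h), "obj"
    (objectness) and "cls" (class scores). truth is None when no
    ground truth object was assigned to this detector.
    """
    if truth is None:
        # No object here: only the objectness error counts, at low weight.
        return LAMBDA_NOOBJ * (0.0 - pred["obj"]) ** 2
    # Object here: location/shape, objectness and class all contribute.
    loc_shape = LAMBDA_COORD * squared_error(pred["box"], truth["box"])
    objectness = LAMBDA_OBJ * (truth["obj"] - pred["obj"]) ** 2
    classes = squared_error(pred["cls"], truth["cls"])
    return loc_shape + objectness + classes


def total_loss(preds, truths):
    """Sum the per-detector losses over all cells and anchor boxes."""
    return sum(detector_loss(p, t) for p, t in zip(preds, truths))
```

In this sketch, two anchor boxes in the same cell would simply be two entries in `preds` and `truths`, one with a ground truth dict and one with `None`.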

Everything about the loss calculations should be interpreted through that lens… I haven’t looked at the YOLO v2 code for a long while, but remember that IOU is used to assign ground truth bounding boxes to specific anchor boxes. Combine that idea with 2) above, and you can see that the concept is consistent; emphasize error contribution from objects predicted by the ‘right’ detector, deemphasize error contribution from the rest. Here ‘right’ means correct grid cell and the anchor box IOU that is both the max IOU for that object shape and exceeds a scalar threshold. Does that make sense?
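As a rough illustration of picking the ‘right’ detector, here is a small Python sketch. It assumes boxes are given as (cx, cy, w, h) in normalized image coordinates, compares a ground truth box to each anchor by shape only (as if they shared a center), and uses a hypothetical threshold of 0.5. The function names are made up for this example and are not taken from any YOLO codebase.

```python
def shape_iou(wh_a, wh_b):
    """IOU of two boxes compared by width/height only (shared center)."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union


def assign_anchor(gt_wh, anchors, threshold=0.5):
    """Index of the anchor with the max IOU, or None if it is not above threshold."""
    ious = [shape_iou(gt_wh, anchor) for anchor in anchors]
    best = max(range(len(anchors)), key=lambda k: ious[k])
    return best if ious[best] > threshold else None


def responsible_detector(gt_box, anchors, grid_w, grid_h, threshold=0.5):
    """Return (i, j, k) for the detector matched to gt_box, or None.

    i and j index the grid cell containing the box center; k is the
    anchor box whose shape best matches the ground truth box.
    """
    cx, cy, w, h = gt_box
    i = int(cx * grid_w)  # cell index along the x axis
    j = int(cy * grid_h)  # cell index along the y axis
    k = assign_anchor((w, h), anchors, threshold)
    return None if k is None else (i, j, k)
```

A detector returned here would get the full "object" loss, while the remaining detectors would get the de-emphasized "no object" treatment.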