Which video is it?

Raymond

Course 3 week 3, state action value function definition

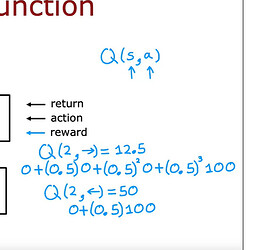

OK! Then I guess you must be talking about this slide:

So the answer is, after you turn right, the OPTIMAL path is then to turn left, left, and left to reach the 100 reward points.

Raymond

OK, so Q always follows the optimal path, it just has to take one step first and then follows the optimal path after that step, correct?

Based on this image, if I take Q of state 5 going left, I end up with 6.25, which is the less optimal value according to the Bellman equation. So will I still move to the left and keep doing so, since the next state, s', is 4 and the max Q values from state 4 lead to the left all the way to the 100 reward? Or how does it use the formula to go from state 4 back to state 5, where it would originally have been more optimal to go right instead?

If possible, do you mind showing me some math equations to explain? If it's too tricky, then just an explanation without a diagram would work too. Thanks a bunch.

There are 2 keys here:

-

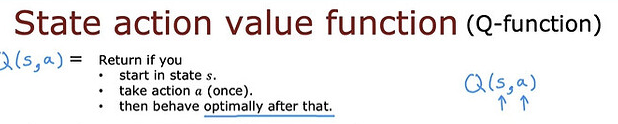

Q(s, a) answers us what the Q is if I am in state s and I take action a. a is a variable of our choice. a is the action we CHOOSE to take. a is chosen regardless it is optimal or not. In simple words, I can choose my first action to be not optimal. But after the first action, the rest of the actions have to be optimal.

-

Follow the three bullet points strictly, because they define Q(s, a).
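Since you asked for some math, here is a rough worked version for your state 5 example. I am assuming the lecture's Mars rover setup: six states in a row, a terminal reward of 100 at state 1, a terminal reward of 40 at state 6, zero reward everywhere else, and a discount factor of 0.5. The 40 and the 0.5 are not stated in this thread, so treat them as assumptions taken from the slide.

The Bellman equation for Q is

$$
Q(s, a) = R(s) + \gamma \max_{a'} Q(s', a')
$$

where $s'$ is the state we land in after taking action $a$ in state $s$. For $s = 5$ and $a = \text{left}$, we land in $s' = 4$:

$$
Q(5, \text{left}) = R(5) + \gamma \max\big(Q(4, \text{left}),\, Q(4, \text{right})\big) = 0 + 0.5 \cdot \max(12.5,\, 10) = 6.25
$$

The max picks left at state 4, so the path is 5 → 4 → 3 → 2 → 1, and adding up the discounted rewards along it gives the same number:

$$
0 + 0.5 \cdot 0 + 0.5^2 \cdot 0 + 0.5^3 \cdot 0 + 0.5^4 \cdot 100 = 6.25
$$

For comparison, under the same assumptions, going right first gives

$$
Q(5, \text{right}) = R(5) + \gamma \cdot R(6) = 0 + 0.5 \cdot 40 = 20
$$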

Raymond

I see, so we take the chosen action once and then behave optimally. So to relate to my example above, it would go from state 5 to 4 and then on to state 1. Is it correct to say so, since it behaves optimally after the 5 to 4 step?

Yes, after moving from 5 to 4, we start to behave optimally, and to behave optimally, we have to keep going to the left.
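If it helps to see this as code, below is a minimal sketch rather than anything from the course materials. It assumes the same rover world as the slide (rewards of 100 at state 1 and 40 at state 6, zero elsewhere, discount factor 0.5), and all the names (`compute_q`, `rollout`, `REWARDS`, and so on) are made up for illustration. It fills in the Q table by repeatedly applying the Bellman equation, then forces a first action and behaves optimally afterwards.

```python
# Assumed setup (not stated in this thread): the lecture's six-state rover world.
GAMMA = 0.5                                         # assumed discount factor
REWARDS = {1: 100, 2: 0, 3: 0, 4: 0, 5: 0, 6: 40}   # assumed reward per state
TERMINAL = {1, 6}                                   # episode ends in these states
ACTIONS = {"left": -1, "right": +1}                 # effect of each action on the state


def compute_q(n_sweeps=50):
    """Fill in Q(s, a) by repeatedly applying the Bellman equation."""
    q = {(s, a): 0.0 for s in REWARDS for a in ACTIONS}
    for _ in range(n_sweeps):
        for s in REWARDS:
            for a, step in ACTIONS.items():
                if s in TERMINAL:
                    # In a terminal state we only collect its reward.
                    q[(s, a)] = float(REWARDS[s])
                else:
                    s_next = s + step
                    best_next = max(q[(s_next, b)] for b in ACTIONS)
                    q[(s, a)] = REWARDS[s] + GAMMA * best_next
    return q


def rollout(q, state, first_action):
    """Take first_action once, then always pick the action with the highest Q."""
    path = [state]
    total, discount = 0.0, 1.0
    action = first_action
    while True:
        total += discount * REWARDS[state]
        if state in TERMINAL:
            break
        state += ACTIONS[action]
        path.append(state)
        discount *= GAMMA
        # From here on, behave optimally: greedy with respect to Q.
        action = max(ACTIONS, key=lambda b: q[(state, b)])
    return path, total


q = compute_q()
path, total = rollout(q, state=5, first_action="left")
print(path, total)  # [5, 4, 3, 2, 1] 6.25
```

The forced first step takes us from 5 to 4. At state 4 the greedy choice compares Q(4, left) = 12.5 with Q(4, right) = 10, so it keeps going left, and the discounted return of that path equals Q(5, left).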

Such a definition makes a lot of sense, right? My Q(s, a) tells me the Q value if I went left, and the Q value if I went right. Given both values, I can choose the best action to take at state s, right? Without these two pieces of information in the first place, how could I decide which way to go?
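As a tiny illustration of that decision, here is a sketch with a hypothetical helper, `best_action`. The 6.25 is the value from your example. The 20 for going right assumes the slide's reward of 40 at state 6 and a discount factor of 0.5.

```python
def best_action(q_values):
    """Return the action whose Q value is largest."""
    return max(q_values, key=q_values.get)


# Q values at state 5 (the value for "right" is an assumption from the slide).
q_at_state_5 = {"left": 6.25, "right": 20.0}
print(best_action(q_at_state_5))  # right
```

So at state 5 the best action is to go right, even though Q(5, left) is still a perfectly well-defined number.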

yup, tks ray!! for the explanation again