Hello,

I wanted to know the intuition behind the value of the discount factor in reinforcement learning, which is usually close to 1 (e.g. 0.99, 0.995, 0.999).

Sincerely

Dalila

Hi there

The discount factor, 𝛾, is a real value ∈ [0, 1] that determines how much the agent values future rewards relative to immediate ones. Let’s explore the two extreme cases:

- If 𝛾 = 0, the agent cares only about its immediate reward.

- If 𝛾 = 1, the agent weights all future rewards equally, no matter how far away they are.
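To make the two cases concrete, here is a minimal sketch of the discounted return, assuming the usual convention that the reward at step t is weighted by 𝛾 to the power t. The function name `discounted_return` and the reward sequence are made up for illustration and are not from the lecture.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * rewards[t], where t = 0 is the immediate reward."""
    total = 0.0
    for t, reward in enumerate(rewards):
        total += (gamma ** t) * reward
    return total


# Hypothetical reward sequence: the agent receives 1.0 at each of four steps.
rewards = [1.0, 1.0, 1.0, 1.0]

print(discounted_return(rewards, 0.0))  # only the immediate reward counts
print(discounted_return(rewards, 1.0))  # every reward counts in full
print(discounted_return(rewards, 0.9))  # later rewards count progressively less
```

With 𝛾 = 0 only the first reward survives, with 𝛾 = 1 the result is the plain sum, and anything in between falls between those two values.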

A reward you receive sooner is simply worth more than the same reward received later, especially over long horizons, where uncertainty about the future is higher.

Note that discounting is also familiar from finance, e.g. discounting future cash flows to their present value to account for opportunity cost. The concept is quite similar. Feel free to take a look.

Best

Christian

Hi @Dalila

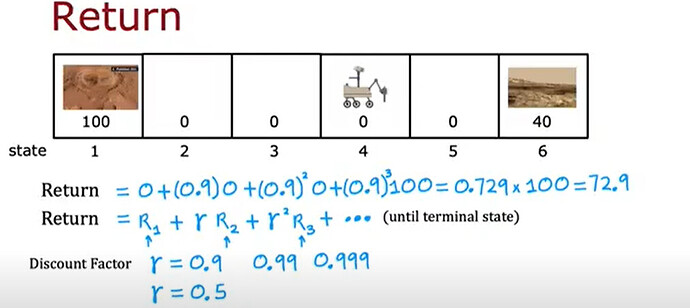

The discount factor gamma controls what fraction of the benefit you keep for each extra step you take. As in the lecture (see the screenshot), if you do not move from your place, you get the full benefit of what is available there. If you move one step to the left, you get the benefit available at that new position, but not 100% of it. Think of it as having spent energy to make the move, with that energy deducted from the benefit. If you move another step, you again get the benefit available at that position, but at an even lower percentage, because you made more effort.

The fraction you keep at each step is the discount factor gamma, and the loss compounds with every additional step: after k steps the benefit is multiplied by gamma to the power k. In real-life applications gamma is often 0.9 or 0.99; the closer it is to 1, the larger the share of the benefit you keep at every step.
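As a rough illustration of how this compounds, the sketch below prints the fraction of a reward that is kept after k steps for the two gamma values mentioned above. The helper name `kept_fraction` and the chosen step counts are only for illustration.

```python
def kept_fraction(gamma, k):
    """Fraction of a reward that remains when it arrives k steps in the future."""
    return gamma ** k


for gamma in (0.9, 0.99):
    for k in (0, 1, 2, 10, 100):
        print(f"gamma={gamma}, k={k}: keeps {kept_fraction(gamma, k):.4f}")
```

For a reward 10 steps away, gamma = 0.9 keeps roughly 35% of it while gamma = 0.99 keeps roughly 90%, which is one way to see why values close to 1 are used when rewards far in the future still matter.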

Thanks!

Abdelrahman

Without a discount factor, the agent has no incentive to reach the terminal state sooner rather than later: if the only reward comes at the terminal state, it could wander around for any number of steps before reaching it and the return would still be the same. With a discount factor, the return (the discounted sum of all future rewards) shrinks the more steps the agent takes to reach the terminal state, because every extra step multiplies the final reward by another factor of 𝛾.
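A small sketch of that argument, under the assumption that the only reward is a terminal reward and that it is discounted once per step taken to reach it. The function name `return_at_goal` and the reward value of 100 are hypothetical.

```python
def return_at_goal(steps, gamma, goal_reward=100.0):
    """Return seen from the start when the only reward (goal_reward,
    a hypothetical value) arrives after `steps` steps."""
    return (gamma ** steps) * goal_reward


# Without discounting (gamma = 1), a short path and a long detour look identical.
print(return_at_goal(2, 1.0), return_at_goal(50, 1.0))

# With discounting, the shorter path gives the larger return.
print(return_at_goal(2, 0.9), return_at_goal(50, 0.9))
```

With gamma = 1 both paths give the same return, so nothing pushes the agent to finish quickly. With gamma = 0.9 the 2-step path is clearly preferred over the 50-step one.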