I| couldn't| find| the| current| weather| for| San| Francisco|.| Please| check

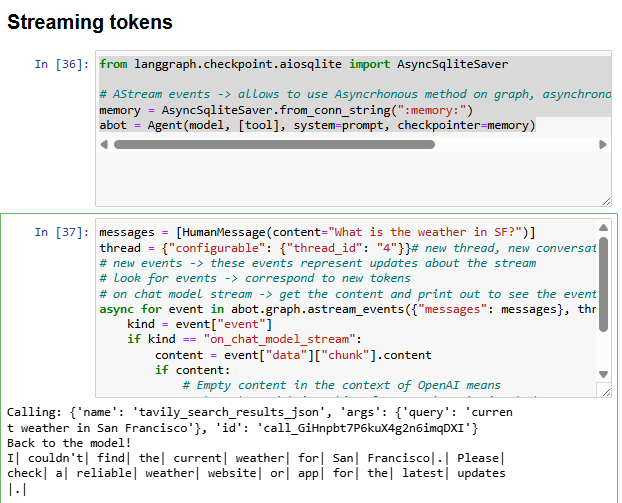

from langgraph.checkpoint.aiosqlite import AsyncSqliteSaver

# astream_events -> lets us call the graph asynchronously; streaming tokens this way requires an asynchronous checkpointer

memory = AsyncSqliteSaver.from_conn_string(":memory:")

abot = Agent(model, [tool], system=prompt, checkpointer=memory)

messages = [HumanMessage(content="What is the weather in SF?")]

thread = {"configurable": {"thread_id": "4"}}  # new thread, new conversation

# astream_events yields events; each event is an update from the stream.
# Look for events of kind "on_chat_model_stream": these correspond to new tokens.
# For those events, get the chunk's content and print it as it arrives.

async for event in abot.graph.astream_events({"messages": messages}, thread, version="v1"):
    kind = event["event"]
    if kind == "on_chat_model_stream":
        content = event["data"]["chunk"].content
        if content:
            # Empty content in the context of OpenAI means
            # that the model is asking for a tool to be invoked.
            # So we only print non-empty content
            print(content, end="|")
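The filtering logic in the loop above can be tried without a model or API key. The sketch below is only an illustration: `FakeChunk`, `fake_events`, and `collect_tokens` are hypothetical names, not part of LangGraph or LangChain, and the fake stream merely mimics the event shape used above (an `"event"` key plus `data["chunk"].content`).

```python
import asyncio
from dataclasses import dataclass


@dataclass
class FakeChunk:
    """Hypothetical stand-in for a streamed chat model chunk."""
    content: str


async def fake_events():
    """Hypothetical event stream shaped like the events filtered above."""
    yield {"event": "on_chain_start", "data": {}}
    # An empty chunk, as emitted when the model requests a tool call.
    yield {"event": "on_chat_model_stream", "data": {"chunk": FakeChunk("")}}
    for token in ["The", " weather", " is", " sunny"]:
        yield {"event": "on_chat_model_stream", "data": {"chunk": FakeChunk(token)}}
    yield {"event": "on_chain_end", "data": {}}


async def collect_tokens(events):
    """Keep only non-empty content from on_chat_model_stream events."""
    tokens = []
    async for event in events:
        if event["event"] != "on_chat_model_stream":
            continue  # ignore chain/tool lifecycle events
        content = event["data"]["chunk"].content
        if content:  # skip empty chunks (tool-call requests)
            tokens.append(content)
    return tokens


tokens = asyncio.run(collect_tokens(fake_events()))
print("|".join(tokens))
```

`asyncio.run` is used here so the sketch works as a plain script. Inside a notebook, where an event loop is already running, `await collect_tokens(fake_events())` would be the equivalent call.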