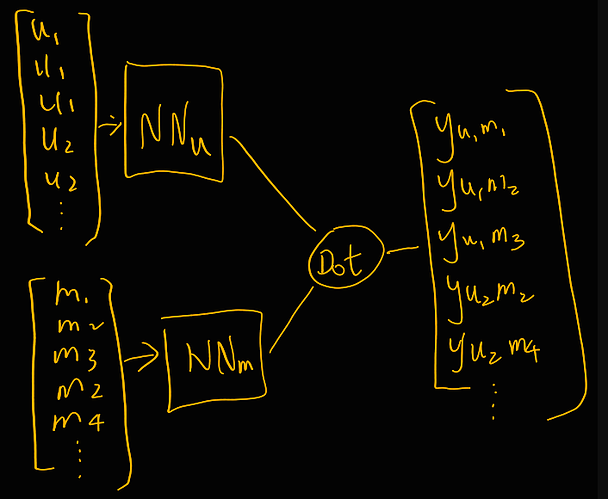

To Whom It May Concern - In the practice lab on content-based filtering, I successfully produced predictions for a single new customer and a single existing customer. Is there a way to produce predictions for a group of customers, or for all customers, in one pass (e.g. through vectorization)? Or do I have to loop through each customer to get rating predictions for all of them?

If looping is the only option, that seems like a computational challenge for really large datasets.

Dan

uid = 2

# form a set of user vectors. This is the same vector, transformed and repeated.

user_vecs, y_vecs = get_user_vecs(uid, user_train_unscaled, item_vecs, user_to_genre)

# scale our user and item vectors

suser_vecs = scalerUser.transform(user_vecs)

sitem_vecs = scalerItem.transform(item_vecs)

# make a prediction

y_p = model.predict([suser_vecs[:, u_s:], sitem_vecs[:, i_s:]])

# unscale y prediction

y_pu = scalerTarget.inverse_transform(y_p)
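
For what it's worth, here is a rough sketch of how the single-user pattern above might extend to many users at once. It is not the lab's code: `users`, `items`, `user_tower`, `item_tower`, and `predict` are all hypothetical stand-ins built from random NumPy arrays, and it assumes the model scores a (user, item) pair as a dot product of two separately computed embeddings. The scaling steps and the `u_s` / `i_s` column slicing are left out; they would apply to the stacked arrays in the same way as to a single user.

```python
import numpy as np

rng = np.random.default_rng(0)

n_users, n_items = 4, 6
n_user_feats, n_item_feats, n_embed = 5, 7, 3

# Stand-in data: one row of (already scaled) features per user and per item.
users = rng.normal(size=(n_users, n_user_feats))
items = rng.normal(size=(n_items, n_item_feats))

# Hypothetical stand-ins for the two trained networks (ASSUMPTION: the real
# model maps users and items to embeddings and takes their dot product).
W_u = rng.normal(size=(n_user_feats, n_embed))
W_i = rng.normal(size=(n_item_feats, n_embed))

def user_tower(x):
    v = np.tanh(x @ W_u)
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def item_tower(x):
    v = np.tanh(x @ W_i)
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def predict(user_rows, item_rows):
    """Stand-in for model.predict([user_rows, item_rows]): one score per row pair."""
    return np.sum(user_tower(user_rows) * item_tower(item_rows), axis=1, keepdims=True)

# 1) Loop: repeat one user's vector for every item, as in the single-user code.
looped = np.vstack([
    predict(np.tile(users[u], (n_items, 1)), items).T
    for u in range(n_users)
])

# 2) One batched call: build every (user, item) pair up front.
#    Row k pairs user k // n_items with item k % n_items.
user_rows = np.repeat(users, n_items, axis=0)   # u0,u0,...,u1,u1,...
item_rows = np.tile(items, (n_users, 1))        # i0,i1,...,i0,i1,...
batched = predict(user_rows, item_rows).reshape(n_users, n_items)

# 3) Embed each user and each item once, then take a single matrix product.
factored = user_tower(users) @ item_tower(items).T

print(np.allclose(looped, batched), np.allclose(looped, factored))
```

With the real model, option 2 would presumably amount to a single `model.predict` call on the stacked arrays, with the flat column of predictions passed through `scalerTarget.inverse_transform` before reshaping to one row per user. Option 3 avoids materializing every pair, which matters for large datasets, but it only works if the two sub-networks can be called separately.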