Hi DeepLearning.AI Community,

I’m working through the CrewAI RAG tool agent setup as part of the ACP: Agent Communication Protocol course, but I’ve hit a roadblock and would appreciate some guidance.

Context:

- I do not have an OpenAI API key, so I am trying to use Hugging Face for both the LLM and the embedding model.

- My goal is to use the DeepSeek RL Distill 70B model from Hugging Face as the LLM, and a BAAI BGE model for embeddings.

My config looks like this:

```python
config = {
    "llm": {
        "provider": "huggingface",
        "config": {
            "model": "deepseek-ai/deepseek-llm-rl-distilled-70b",
            "api_key": HF_key,
        },
    },
    "embedding_model": {
        "provider": "huggingface",
        "config": {
            "model": "BAAI/bge-small-en-v1.5",
            "api_key": HF_key,
        },
    },
}
```

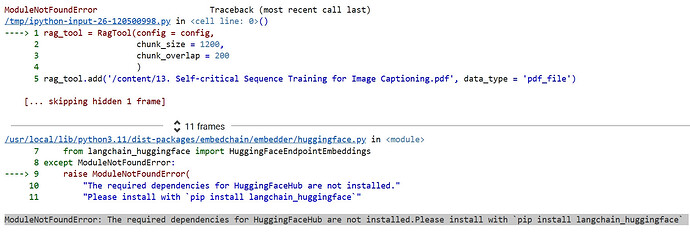

The error I am getting:

```text
ModuleNotFoundError: The required dependencies for HuggingFaceHub are not installed. Please install with `pip install langchain_huggingface`
```
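
In case it helps with diagnosing this, here is a minimal sketch of how I could check whether the package named in the error is visible to the interpreter that runs my notebook. The helper name `is_installed` is my own and not part of CrewAI or LangChain; it only uses the standard library.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located by the current interpreter."""
    # find_spec returns None when no top-level module with this name is found.
    return importlib.util.find_spec(module_name) is not None


# Module name taken from the error message above.
print("langchain_huggingface found:", is_installed("langchain_huggingface"))
```

If this prints `False` even after running `pip install langchain_huggingface`, I assume the package went into a different environment than the one the kernel is using.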

My Doubts & Questions:

- Is my config correct for using Hugging Face models with CrewAI’s RagTool?

- For the DeepSeek LLM, is "deepseek-ai/deepseek-llm-rl-distilled-70b" the right model string for Hugging Face?

- How do I know which embedding models are compatible with the DeepSeek LLM for RAG tasks? Is BAAI/bge-small-en-v1.5 a good choice, or is there a recommended pairing?

- Are there any other dependencies or setup steps I might be missing (besides installing langchain_huggingface)?

- If anyone has a working example of this setup (using Hugging Face for both LLM and embedder in CrewAI RAG), could you please share it?

Error Stage:

- The error occurs immediately when initializing the RagTool with the config above, before any data is loaded or agents are run.

Any advice, clarifications, or pointers to documentation/examples would be greatly appreciated!

Thank you in advance.