Has anyone here managed to complete exercises 1 and 2 in the week 2 practice lab for the Machine Learning Coursera course? I don’t understand whether exercise 1, for example, also wants us to generate iterations for the cost, or just to write the basic code for computing the cost. The previous labs were not exactly instructive. How do you “loop over training examples”?

Hello @JMG511,

Yes, I have.

Exercise 1 asks us to compute the cost value given x, y, w, and b. So if w = 1 and b = 2, and we have two (x, y) samples, (3, 4) and (5, 6), then the function is expected to return 0.5 as the value of the cost.
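To see where the 0.5 comes from, here is the arithmetic written out. It assumes the linear model $f_{w,b}(x) = wx + b$ and the usual squared-error cost with a $1/(2m)$ factor; the formula itself is not quoted in this thread, but it is consistent with the value above.

$$
\begin{aligned}
f_{w,b}(3) &= 1 \cdot 3 + 2 = 5, & (5 - 4)^2 &= 1 \\
f_{w,b}(5) &= 1 \cdot 5 + 2 = 7, & (7 - 6)^2 &= 1 \\
J(w,b) &= \frac{1}{2m} \sum_{i=0}^{m-1} \left(f_{w,b}(x^{(i)}) - y^{(i)}\right)^2 = \frac{1}{2 \cdot 2}\,(1 + 1) = 0.5
\end{aligned}
$$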

Of course, since the number of samples may vary from case to case, we might need a for loop to go over the samples, calculate the individual loss of each sample, and sum the losses into the cost.

There is also a code skeleton ready in the hint section right below the exercise cell in which you would find how the loop could be structured.

Cheers,

Raymond

Thanks @rmwkwok yeah I saw the hints and put in the formulae for cost_sum, f_wb and cost, cost_sum and total cost. But I didn’t see anything in the hints about the loop part specifically. I don’t know for sure how to do this part: “In this case, you can iterate over all the examples in x using a for loop and add the cost from each iteration to a variable (cost_sum) initialized outside the loop.”

Please clarify for me what the loop part is because to me it isn’t clear from the hints.

Hello Jason @JMG511,

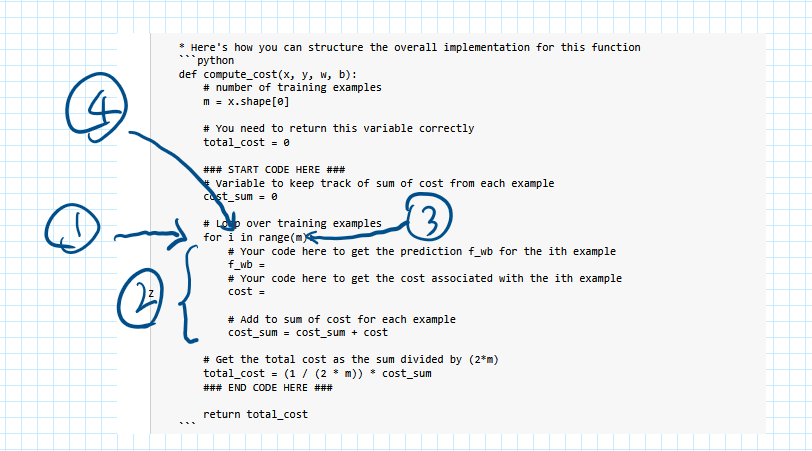

So, you see the for syntax (1) there? That’s the beginning of a for loop, the construct that repeats things. Anything that is indented further (2) is being looped, so in this case the cost is calculated and then accumulated into cost_sum in every iteration. The range(m) (3) makes the loop run m times; for how range works, I will leave it to you to google. Since m is, as mentioned a few lines up, the number of training examples, the loop runs once for each example we have.

Finally, (4) i is the loop index, which you can use to pick out each training example one by one. Inside the loop, you index an example with i, and you calculate the loss of that example.
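As a minimal sketch of that pattern (initialize a variable outside the loop, then add to it in every iteration), here is a loop that sums the squares of a list. The list `values` and the names `total` and `squared` are made up for illustration, and the calculation is deliberately not the lab's cost formula.

```python
values = [3.0, 5.0, 7.0]   # made-up data, standing in for the training examples
m = len(values)            # how many items there are

total = 0.0                # initialized once, outside the loop
for i in range(m):         # i takes the values 0, 1, ..., m - 1
    squared = values[i] ** 2   # work done for the i-th item only
    total = total + squared    # accumulate into the running sum

print(total)  # 9 + 25 + 49 = 83.0
```

The two indented lines run once per item; the `print` is not indented, so it runs once, after the loop has finished.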

Cheers,

Raymond

@rmwkwok Well, this is what the hint explicitly says, but what I was asking is that I don’t know the code for actions like looping. The other definitions, like f_wb and cost, I know. The teacher didn’t teach the coding aspect much, or at all.

I see. Well, to loop a line of code, you put it there indented like (2), and that’s all.

For example, if you want to print “Hello world” 5 times, you do the following:

    for i in range(5):
        print("Hello world")

Why does it print five times? Because the printing code is inside the for loop. Why is it inside? Because the line is indented one level further.

The following will print it ten times:

    for i in range(5):
        print("Hello world")
        print("Hello world")

The following prints it six times:

    for i in range(5):
        print("Hello world")
    print("Hello world")

You are right that this course does not teach coding because it’s about machine learning. We do use code to do machine learning, but coding itself deserves its own dedicated course.

The ML courses assume at least fundamental Python programming skills.

It might be useful to attend an Intro to Python course. There are many of them for free online.

Okay but so in this case the number of iterations would be what? I just know the cost has to be computed at each iteration. It isn’t clear to me what they want.

The compute cost function doesn’t care about the number of iterations. It just computes the cost once, using the current set of parameters over the whole dataset.

When you want to find the minimum cost by computing the gradients and modifying the weights, that process is where the number of iterations comes into play.

That comes later in the assignment.

Note that in the compute_cost() function, the value ‘m’ represents the number of examples in the dataset.

It has nothing to do with the number of iterations used to find the optimum solution.
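The difference between the two counts can be sketched with a toy example. Everything here is hypothetical: `mean_abs_gap` is a stand-in scoring function (not the lab's cost), `num_iters` is an arbitrary number, and the `w += 0.5` step is a placeholder rather than a real gradient descent update.

```python
def mean_abs_gap(x, y, w):
    """Stand-in for a cost function: one pass over all m examples, one number out."""
    m = len(x)                       # m = number of examples in the dataset
    total = 0.0
    for i in range(m):               # inner loop: over the examples
        total += abs(w * x[i] - y[i])
    return total / m

x = [1.0, 2.0, 3.0]                  # made-up inputs
y = [2.0, 4.0, 6.0]                  # made-up targets

num_iters = 5                        # chosen by us; unrelated to m
w = 0.0
history = []
for step in range(num_iters):        # outer loop: over optimization iterations
    history.append(mean_abs_gap(x, y, w))  # one full pass over the data per call
    w += 0.5                         # placeholder update, NOT gradient descent

print(history)  # [4.0, 3.0, 2.0, 1.0, 0.0]
```

The function never sees `num_iters`; it only knows about the m examples it is handed. The iteration count lives in whatever code calls the function repeatedly.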

Ok right. I could be wrong and don’t have it in front of me now but I don’t remember there being a given number of training examples. Is it just asking to compute cost given m examples?

The training set is provided via the ‘X’ function parameter.

‘m’ is computed from the shape of ‘X’.
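A minimal sketch of what that looks like, assuming the training inputs arrive as a one-dimensional NumPy array (the values below are made up, and the lowercase name `x` is just for illustration):

```python
import numpy as np

x = np.array([3.0, 5.0])   # made-up training inputs
m = x.shape[0]             # length of the first axis = number of examples

for i in range(m):         # visits index 0, then index 1
    print(i, x[i])
```

So the number of examples is never passed in separately; it is read off the data itself.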

Hey JMG511 — I remember being in that exact spot when I went through the course. Week 2’s lab can feel a bit unclear at first. For exercise 1, the main goal is to implement the cost function correctly; the iterations for cost come in later when you combine it with gradient descent. And when they say “loop over training examples,” it just means going through each data point one by one inside your function (rather than using vectorized NumPy operations).

If it helps, I’ve been keeping my own notes and worked examples while going through these same labs and expanding on them. I turned them into a living repo on GitHub: {link deleted}. It breaks down linear regression step by step with derivations, cost functions, and gradient descent — basically the same concepts you’re practicing in week 2.

Might give you a bit more clarity while you work through the lab, and I’d be curious if it helps fill in the gaps.

Okay, thanks, yes, I’m going to take you up on that! Will take a look later. I put in some code which may be correct, but when I run the cells after answering the exercise, nothing happens.

Sorry, but I had to delete the reference to your repo, because it contains solutions to the programming assignments (within your pdf files).

Sharing your solutions is not allowed by the Code of Conduct.