Context:

In the class notes “C1_W2.pdf”, page 11, the gradient descent algorithm defines a variable alpha (the learning rate):

w = w - alpha * dJ(w)/dw
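For illustration, here is a minimal sketch of that update rule on a one-dimensional toy cost J(w) = (w - 3)^2. The cost and the names `dJ_dw` and `gradient_descent` are hypothetical, chosen only because the derivative 2(w - 3) is easy to check by hand. `alpha` scales every step, and leaving it out is equivalent to using alpha = 1.

```python
def dJ_dw(w):
    """Derivative of the hypothetical toy cost J(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def gradient_descent(w, alpha, num_iterations):
    """Apply w = w - alpha * dJ(w)/dw repeatedly and return the final w."""
    for _ in range(num_iterations):
        w = w - alpha * dJ_dw(w)
    return w


# With a small learning rate the iterate converges to the minimum at w = 3.
print(round(gradient_descent(w=0.0, alpha=0.1, num_iterations=100), 4))  # prints 3.0

# Dropping alpha is the same as alpha = 1.0; on this toy cost the iterate
# then bounces between 0 and 6 instead of converging.
print(gradient_descent(w=0.0, alpha=1.0, num_iterations=3))  # prints 6.0
```

The point of the second call: an update written without the learning rate is not "the same rule with a default", it takes a different, larger step and so produces different values after each iteration.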

Issue:

- In Exercise 6 (optimize), there is no ALPHA variable defined.
- Different attempts to update the w, b parameters fail (step "(2) Update the parameters using gradient descent rule for w and b.").

Several variants have been tried, but all of them fail even though the matrix dimensions are correct, for example:

w = w - dw (and the analogous update for b)
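A minimal sketch of how the update inside such an optimize loop could look, assuming the exercise's `optimize` function receives the step size as a `learning_rate` argument that plays the role of alpha (check the function signature in your copy of the notebook). `propagate_sketch` and `optimize_sketch` are hypothetical stand-ins written with the standard logistic-regression cost and gradients, and recording the cost every 100 iterations is an assumption about the exercise's bookkeeping. The data below is made up for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def propagate_sketch(w, b, X, Y):
    """Hypothetical stand-in for the forward/backward step.

    w: (n_x, 1) weights, b: scalar bias, X: (n_x, m) inputs, Y: (1, m) labels.
    Returns the gradients and the cross-entropy cost.
    """
    m = X.shape[1]
    A = sigmoid(w.T @ X + b)                    # (1, m) predicted probabilities
    cost = -np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A))
    dw = (X @ (A - Y).T) / m                    # (n_x, 1), same shape as w
    db = np.sum(A - Y) / m                      # scalar
    return {"dw": dw, "db": db}, float(cost)


def optimize_sketch(w, b, X, Y, num_iterations=100, learning_rate=0.009):
    costs = []
    for i in range(num_iterations):
        grads, cost = propagate_sketch(w, b, X, Y)
        # learning_rate is alpha: scale each gradient before subtracting it.
        # Writing "w = w - dw" silently uses alpha = 1 and yields other costs.
        w = w - learning_rate * grads["dw"]
        b = b - learning_rate * grads["db"]
        if i % 100 == 0:
            costs.append(cost)                  # cost before this iteration's update
    return {"w": w, "b": b}, grads, costs


# Illustrative data only (not the values used by public_tests.py).
X = np.array([[1.0, 2.0, -1.0], [3.0, 4.0, -3.0]])
Y = np.array([[1, 0, 1]])
params, grads, costs = optimize_sketch(np.zeros((2, 1)), 0.0, X, Y,
                                       num_iterations=200, learning_rate=0.1)
print(costs)  # first entry is ln(2) because w = 0, b = 0 gives A = 0.5
```

Because the recorded costs depend on the step size, running the same loop with a different learning rate (including the implicit alpha = 1 of "w = w - dw") produces a different second cost even when every shape is correct.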

Please help triage the issue. Thanks.

Error Observed:

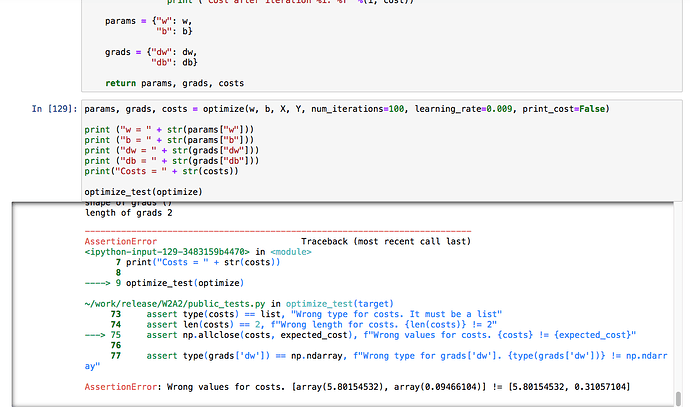

```
AssertionError                            Traceback (most recent call last)
in
      7 print("Costs = " + str(costs))
      8
----> 9 optimize_test(optimize)

~/work/release/W2A2/public_tests.py in optimize_test(target)
     73 assert type(costs) == list, "Wrong type for costs. It must be a list"
     74 assert len(costs) == 2, f"Wrong length for costs. {len(costs)} != 2"
---> 75 assert np.allclose(costs, expected_cost), f"Wrong values for costs. {costs} != {expected_cost}"
     76
     77 assert type(grads['dw']) == np.ndarray, f"Wrong type for grads['dw']. {type(grads['dw'])} != np.ndarray"

AssertionError: Wrong values for costs. [array(5.80154532), array(0.09466104)] != [5.80154532, 0.31057104]
```