During the lecture on negative sampling for learning word embeddings, Andrew described it as a set of multiple binary classification problems rather than a single softmax problem.

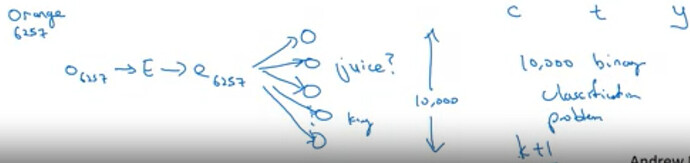

According to Andrew, the image above represents the training dataset. To me, though, it looks like a single binary classification problem: given the “Context” and the “Word”, the target is either 1 or 0.
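To make my reading of that table concrete, here is a minimal sketch of how I understand the rows are generated. The function name `make_examples`, the toy vocabulary, and `k=4` are my own assumptions for illustration. I also sample negatives uniformly and exclude the true word, which is a simplification and not necessarily how the lecture samples them.

```python
import random

def make_examples(context, target, vocab, k=4, seed=0):
    """Build one positive row and k negative rows for a (context, target) pair.

    Hypothetical helper: uniform sampling without replacement is an
    assumption made here to keep the sketch short.
    """
    rng = random.Random(seed)
    # The observed (context, target) pair is the single positive example.
    rows = [(context, target, 1)]
    # Negative words are drawn from the rest of the vocabulary.
    candidates = [w for w in vocab if w != target]
    for word in rng.sample(candidates, k):
        rows.append((context, word, 0))
    return rows

vocab = ["orange", "juice", "king", "book", "the", "of"]
rows = make_examples("orange", "juice", vocab, k=4)
for row in rows:
    print(row)
```

Each printed row is a (context, word, target) triple, which is why every row on its own looks to me like one binary classification example.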

I thought I was right after seeing Andrew write the formula mentioned above.

But while drawing the neural network representation, Andrew said it was a multiple binary classification problem, which the formula written above seems to contradict.

So which is correct: the formula, or the neural network representation posted below, which doesn’t make sense to me?