Why is the regularization term removed from the training error once the cross-validation set is introduced?

Because we want to measure how well the model fits the data, not how “small” the weights are. The regularization term is not part of the prediction error. It’s a penalty that controls overfitting during training, not something we care about when judging performance on data. In modern frameworks, regularization is often handled by the optimizer (via weight decay), in which case it isn’t even part of the loss function you compute.

Then in what situations would you include the regularization term in the cost function for the training set, and when would you not?

Regularization is only used in training.

Let me extend @TMosh Tom’s answer. Say we want to use L_2 regularization during training and also monitor the cost function. If we see the regularized cost decrease during training, it’s hard to tell whether the model is really learning or whether we are just shrinking the L_2 norm of the weights. This is why, in practice, we may want to track both the regularized cost, which is what the training algorithm optimizes, and the unregularized loss (i.e., prediction error), which tells us how well the model fits the data. We include the regularization term when we’re computing the function that the optimizer minimizes (for example, via automatic differentiation), or when we want to check that the optimizer is making progress on the full objective. However, maintaining two separate objective functions by hand is not very convenient. Modern frameworks let you avoid that by applying regularization outside the loss function you write: in Keras, a layer’s kernel_regularizer adds the penalty to the training loss for you, and in PyTorch the optimizer’s weight_decay argument applies it in the update step. Either way, the loss function you define stays focused purely on prediction error.
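To make the "track both" idea concrete, here is a minimal NumPy sketch of gradient descent for L_2-regularized linear regression. The function names (`mse_loss`, `l2_penalty`, `train`), the synthetic data, and the hyperparameters are all made up for illustration, and the bias is left unregularized as an assumption about the setup.

```python
import numpy as np

def mse_loss(X, y, w, b):
    """Unregularized prediction error: (1 / 2m) * sum((f(x) - y)^2)."""
    m = X.shape[0]
    residual = X @ w + b - y
    return float(residual @ residual) / (2 * m)

def l2_penalty(w, lambda_, m):
    """Regularization term: (lambda / 2m) * sum(w_j^2). The bias is not penalized."""
    return lambda_ * float(w @ w) / (2 * m)

def train(X, y, lambda_=1.0, alpha=0.1, steps=200):
    """Gradient descent on the regularized cost, logging both quantities."""
    m, n = X.shape
    w, b = np.zeros(n), 0.0
    history = []  # one (regularized_cost, unregularized_loss) pair per step
    for _ in range(steps):
        residual = X @ w + b - y
        grad_w = X.T @ residual / m + lambda_ * w / m  # penalty affects the gradient
        grad_b = residual.mean()
        w -= alpha * grad_w
        b -= alpha * grad_b
        loss = mse_loss(X, y, w, b)
        history.append((loss + l2_penalty(w, lambda_, m), loss))
    return w, b, history

# Synthetic data, purely for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 0.3
w, b, history = train(X, y)
```

The first entry of each pair in `history` is what the optimizer is minimizing; the second is the number you would look at to judge how well the model fits the data.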

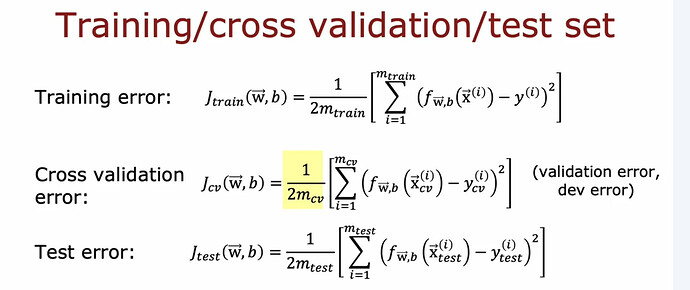

Hi Conscell, I’m a bit confused by this slide from week 3. The cost function in the red box is for the training set, right? And here it does include the regularization term. So can I understand it this way: we include the regularization term in the training set cost when we fit the parameters, but we don’t include it when we calculate the actual training set error to evaluate the model’s performance?

Hi Conscell, I wonder if my confusion comes from mixing up the cost function with the training set error. The cost function is the whole of J(w,b), which includes the regularization term in order to fit the parameters w and b, whereas the training set error is only the left part in the red box, which doesn’t include the regularization term. Is my understanding correct?

Hi @flyunicorn ,

Please revisit the video lectures and listen carefully to what Prof. Ng is addressing in week 3: what can affect the model, and how to address the bias and variance problems of your algorithm.

You can treat λ as a dial: turning it up or down changes how the algorithm behaves. The cost function can include a λ term when finding W and b. To check that your algorithm is fit for purpose, look at the training error and the cross-validation error. If there is a bias or variance problem, you can try different values of λ and pick the one whose W and b give the best trade-off between the two. Of course, the training data and the algorithm itself also need to be looked at.
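As a rough sketch of that tuning loop, the snippet below fits a regularized linear regression for several values of λ and compares the unregularized training and cross-validation errors. The helper names (`fit_ridge`, `prediction_error`), the candidate λ values, the synthetic data, and the closed-form solver are illustrative assumptions, not the course’s code.

```python
import numpy as np

def fit_ridge(X, y, lambda_):
    """Minimize (1/2m)*||Xw + b - y||^2 + (lambda/2m)*||w||^2 in closed form."""
    m, n = X.shape
    A = np.hstack([X, np.ones((m, 1))])  # last column carries the bias
    D = np.eye(n + 1)
    D[-1, -1] = 0.0                      # do not penalize the bias
    theta = np.linalg.solve(A.T @ A + lambda_ * D, A.T @ y)
    return theta[:-1], theta[-1]

def prediction_error(X, y, w, b):
    """Unregularized error, used for both J_train and J_cv."""
    residual = X @ w + b - y
    return float(residual @ residual) / (2 * X.shape[0])

# Synthetic data: only the first feature matters, the rest are noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 8))
y = 3.0 * X[:, 0] + rng.normal(scale=0.5, size=60)
X_train, y_train = X[:40], y[:40]
X_cv, y_cv = X[40:], y[40:]

results = []  # (lambda, J_train, J_cv)
for lambda_ in [0.0, 0.01, 0.1, 1.0, 10.0, 100.0]:
    w, b = fit_ridge(X_train, y_train, lambda_)  # lambda is used for fitting only
    results.append((lambda_,
                    prediction_error(X_train, y_train, w, b),
                    prediction_error(X_cv, y_cv, w, b)))

# Pick the lambda with the lowest cross-validation error.
best_lambda = min(results, key=lambda r: r[2])[0]
```

Note that λ appears only inside `fit_ridge`; both reported errors are computed without the penalty, so they stay comparable across different values of λ.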

The lambda value adds cost.

Our goal is to minimize the cost.

So the effect of lambda during training is to encourage learning smaller weight values (in the course’s formulation, only the weights are penalized, not the bias).

After training has converged, you compare Jtrain, Jcv, and Jtest to measure the model’s performance. For that, you should set lambda to 0 when you call the cost function, so that you don’t get any additional cost based on the magnitude of the learned parameters.

Hi @flyunicorn,

Yes, on the first slide, the cost function in the red box, $\displaystyle \min_{\vec w, b} J(\vec w, b)$, does include the regularization term. The equation explicitly states that the goal is to minimize it.

On the second slide, the equation in the red box, $\displaystyle {1 \over 2m} \sum_{i=1}^m (f_{\vec w, b} (\vec x^{(i)}) - y^{(i)})^2$, represents the prediction error, and as you can see, it doesn’t include the regularization term.
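For reference, here are the two quantities side by side. This assumes the usual regularized linear regression setup with $n$ features and a penalty on the weights only; the slides are not reproduced here, so the exact notation (including $m_{train}$) is an assumption.

$$
J(\vec w, b) = \frac{1}{2m} \sum_{i=1}^{m} \left( f_{\vec w, b}(\vec x^{(i)}) - y^{(i)} \right)^2 + \frac{\lambda}{2m} \sum_{j=1}^{n} w_j^2
$$

$$
J_{train}(\vec w, b) = \frac{1}{2 m_{train}} \sum_{i=1}^{m_{train}} \left( f_{\vec w, b}(\vec x^{(i)}) - y^{(i)} \right)^2
$$

The first is what gets minimized to find $\vec w$ and $b$; the second is the same expression with the $\lambda$ term dropped, evaluated on the training examples.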

As @TMosh said, we exclude the regularization term when evaluating the model’s performance.

So are the terms “cost function” and “training set error” the same thing?

Training set error is the error measured on the training examples (or a subset of the dataset), whereas the cost function measures the discrepancy between the predicted values and the actual values.

Training set error and cost function are not the same, but they are closely related, since a higher training set error leads to a higher cost.

Because the cost function is evaluated on the training data (and not on the CV data) during fitting, the regularization term, scaled by lambda, penalizes large weights so that the model is less likely to overfit the training set.

Perhaps the confusion between these two terms arose because the cost function is also called the error function.

Here is another discussion that explains the use of the lambda value in the cost function.

@flyunicorn,

In this context, by “prediction error” I mean the mean squared error (MSE), which is the cost function excluding the regularization term.

No.

The cost function is a piece of code that will compute the cost given a set of data and a set of weights, and optionally a regularization lambda value.

The training set error is computed with the cost function, using the training set.
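A minimal sketch of such a cost function for linear regression is shown below. The name `compute_cost`, its signature, and the toy numbers are illustrative assumptions rather than the course’s exact code.

```python
import numpy as np

def compute_cost(X, y, w, b, lambda_=0.0):
    """Squared-error cost with an optional L2 penalty on the weights.

    With lambda_ = 0 (the default) this is the plain prediction error.
    """
    m = X.shape[0]
    residual = X @ w + b - y
    cost = float(residual @ residual) / (2 * m)
    cost += lambda_ * float(w @ w) / (2 * m)  # penalty on w only, not b
    return cost

# Toy training set and parameters, chosen only to keep the arithmetic simple.
X_train = np.array([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]])
y_train = np.array([5.0, 4.0, 7.0])
w, b = np.array([1.0, 1.0]), 1.0

# What the optimizer would minimize during training (about 1.2 here).
training_objective = compute_cost(X_train, y_train, w, b, lambda_=0.6)

# Training set error used for evaluation: same function, lambda left at 0 (1.0 here).
j_train = compute_cost(X_train, y_train, w, b)
```

Calling the same function on the cross-validation or test set with `lambda_` left at 0 gives the corresponding Jcv or Jtest.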