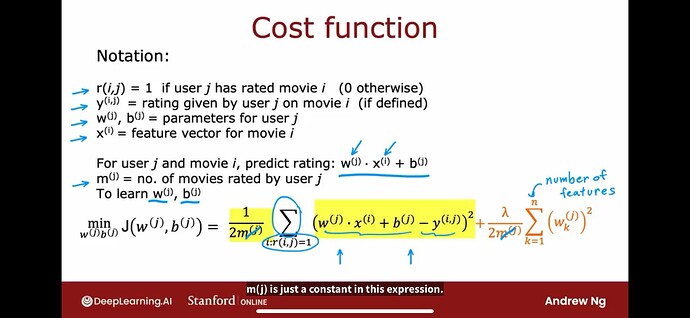

Why only “m” (count of valid data) in Collaborative Filtering Cost function can be deleted? While as “m” stays in Linear Regression and Logistic Regression’s Cost functions? (Can they also be deleted?)

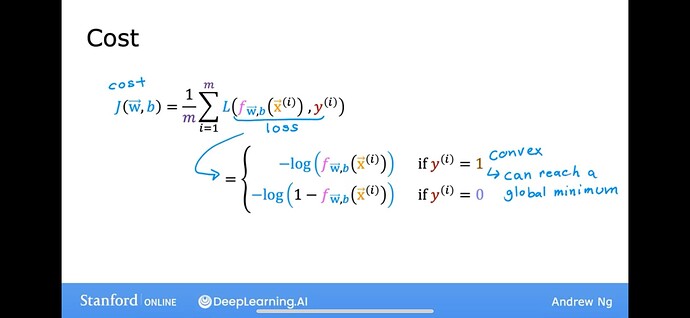

Since ‘m’ is a constant, then 1/m is also a constant. All it does is scale the magnitude of the cost.

But “m” in Linear Regression and Logistic Regression’s Cost Function is also a constant, why it stays? Can it be removed as well?

Yes. But that’s not the traditional method.

Different techniques were invented by different people, with different ideas.

There is very little standardization across methods.

Hello @Jinyan_Liu,

If we keep the m there, we actually cannot move it out of the summation sign. This means we will have to do as many divisions as the number of user when computing the cost (and consequently the gradients). Division is an expensive operation.

On the other hand, since m is a constant with respect to each user, we can consider to “absorb” the m into the w, b, and y by, for example, rewriting b^{(j)} as \frac{b^{(j)}}{\sqrt{m^{(j)}}}. However, in this case, y will be scaled down. This means that we are scaling down more on the rating given by users who rated more. As the designer of this algorithm, do we want that?

There are some other way to consider this but I will leave that as an exercise to you for the future ![]() As for the m in Linear Regression, it is also an exercise for you to think/experiment about it. Tom has shedded light on it. Always try to think from both sides - keep and remove, and see how you will balance those factors.

As for the m in Linear Regression, it is also an exercise for you to think/experiment about it. Tom has shedded light on it. Always try to think from both sides - keep and remove, and see how you will balance those factors. ![]()

Good luck!

Raymond

Another benefit to leaving 1/m in the cost function is if you are going to vary the size of the training set (i.e. if m isn’t a constant), but you want to compare improvements in the cost vs. the training set size (i.e. using a learning curve).

Typically this isn’t done in collaborative filtering, so there’s no significant in keeping it.

Thanks @rmwkwok ! ![]()

Although there are other pros and cons for me to find in future, but your explanation helps a lot! I didn’t think carefully: m is a constant per user! If leave it there, it can complicate the cost function and also the algorithm, without gaining better performance. ![]()

Thank you! ![]()

Thanks @TMosh !

That’s true! ![]()

Basically removing the “Mean” from “Mean Squared Error”. Therefore cost function values will be large and I am not sure what the implications of that will be on the learning rate or any other optimizer we use. I guess we will have to set the learning rate to a small value.

Also, like @TMosh mentioned we can’t compare cost function values when the size of training data changes unless we find out MSE separately.

The optimizers don’t use the cost value for anything except monitoring. The magnitude doesn’t matter much.

The key factor for an optimizer is the gradients.

Good point, @tinted. Give and take. I described a take, you mentioned a give, and both are important. However, if your concern is going to be a negative one throughout the development cycle of a recommender, we could test if adding a scaling factor \frac{1}{\sum_j {m^{(j)}}} would make a positive difference, couldn’t we? ![]()

Cheers,

Raymond

yep, the algorithm will work since the direction in which the new w, b update is the main working principle of the optimizer. But, the learning rate will have to decrease because of the increase in magnitude of cost function.

But, if we use optimizers like “Adam” which changes the learning rate dynamically then maybe this is not a problem at all.

Edit: My bad on using the picture I used, that red underline was from prof. Andrew, I just used that image as a reference to the gradient equations, nothing else.

Also, when I say the learning rate has to decrease, I meant in relation to the learning rate if we have that 1/m.