I’ve been meaning to use this course in a class I’m teaching soon, but ran into the errors that have been documented in previous threads. Since the answer was “the model used in the course is deprecated”, I tried to replace it with other models from the together.ai website. However, no matter which I try, I always get “error 403: using X is invalid” (where X is the model name). What’s more, trying to list models with client.models.list() also throws an error. Is there any way I can adjust the course to be workable before the devs patch it up?

(just a community volunteer)

I’ll try to take a look at it, as I will have a few free days available soon.

For reference, can you post some screen capture images of the error messages you’re seeing when you try other models?

Thanks! Here is the full traceback I get when I simply replace `meta-llama/Llama-3-70b-chat-hf` with `openai/gpt-oss-20b` (for example, in the 4th cell of L2):

```
InvalidRequestError                       Traceback (most recent call last)
Cell In[4], line 1
----> 1 model_output = client.chat.completions.create(
      2     model="openai/gpt-oss-20b",
      3     temperature=1.0,
      4     messages=[
      5         {"role": "system", "content": system_prompt},
      6         {"role": "user", "content": world_info + '\nYour Start:'}
      7     ],
      8 )

File /usr/local/lib/python3.11/site-packages/together/resources/chat/completions.py:136, in ChatCompletions.create(self, messages, model, max_tokens, stop, temperature, top_p, top_k, repetition_penalty, presence_penalty, frequency_penalty, min_p, logit_bias, stream, logprobs, echo, n, safety_model, response_format, tools, tool_choice)
    109 requestor = api_requestor.APIRequestor(
    110     client=self._client,
    111 )
    113 parameter_payload = ChatCompletionRequest(
    114     model=model,
    115     messages=messages,
   (...)
    133     tool_choice=tool_choice,
    134 ).model_dump()
--> 136 response, _, _ = requestor.request(
    137     options=TogetherRequest(
    138         method="POST",
    139         url="chat/completions",
    140         params=parameter_payload,
    141     ),
    142     stream=stream,
    143 )
    145 if stream:
    146     # must be an iterator
    147     assert not isinstance(response, TogetherResponse)

File /usr/local/lib/python3.11/site-packages/together/abstract/api_requestor.py:249, in APIRequestor.request(self, options, stream, remaining_retries, request_timeout)
    231 def request(
    232     self,
    233     options: TogetherRequest,
   (...)
    240     str | None,
    241 ]:
    242     result = self.request_raw(
    243         options=options,
    244         remaining_retries=remaining_retries or self.retries,
    245         stream=stream,
    246         request_timeout=request_timeout,
    247     )
--> 249     resp, got_stream = self._interpret_response(result, stream)
    250     return resp, got_stream, self.api_key

File /usr/local/lib/python3.11/site-packages/together/abstract/api_requestor.py:620, in APIRequestor._interpret_response(self, result, stream)
    612     return (
    613         self._interpret_response_line(
    614             line, result.status_code, result.headers, stream=True
    615         )
    616         for line in parse_stream(result.iter_lines())
    617     ), True
    618 else:
    619     return (
--> 620         self._interpret_response_line(
    621             result.content.decode("utf-8"),
    622             result.status_code,
    623             result.headers,
    624             stream=False,
    625         ),
    626         False,
    627     )

File /usr/local/lib/python3.11/site-packages/together/abstract/api_requestor.py:689, in APIRequestor._interpret_response_line(self, rbody, rcode, rheaders, stream)
    687 # Handle streaming errors
    688 if not 200 <= rcode < 300:
--> 689     raise self.handle_error_response(resp, rcode, stream_error=stream)
    690 return resp

InvalidRequestError: Error code: 403 - {"message": "using openai/gpt-oss-20b is invalid", "type_": null, "param": null, "code": null}
```
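Since every model reportedly fails with the same 403 error, a quick diagnostic is to send one request per candidate model and record which ones succeed and what error each one raises. The sketch below is hypothetical: `probe_models` and `make_chat_call` are illustrative helper names, not part of the course or the Together SDK. The only real API it touches is `client.chat.completions.create(...)`, called with the same arguments as the cell in the traceback above. Any exception is treated as "this model is not usable right now".

```python
from typing import Any, Callable, Dict, Iterable


def probe_models(
    candidates: Iterable[str],
    call: Callable[[str], Any],
) -> Dict[str, str]:
    """Try each model name once and record "ok" or the error it raised.

    `call` is any function that sends a single request for the given
    model name (e.g. the wrapper returned by make_chat_call below).
    """
    results: Dict[str, str] = {}
    for model in candidates:
        try:
            call(model)
            results[model] = "ok"
        except Exception as exc:  # e.g. the 403 InvalidRequestError above
            results[model] = f"{type(exc).__name__}: {exc}"
    return results


def make_chat_call(
    client: Any, system_prompt: str, user_prompt: str
) -> Callable[[str], Any]:
    """Hypothetical helper: wrap the notebook's chat call so only the model varies."""

    def call(model: str) -> Any:
        return client.chat.completions.create(
            model=model,
            temperature=1.0,
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_prompt},
            ],
        )

    return call
```

In the notebook this could be run as `probe_models(["meta-llama/Llama-3-70b-chat-hf", "openai/gpt-oss-20b"], make_chat_call(client, system_prompt, world_info + '\nYour Start:'))`. If every model comes back with the same 403 message, the choice of model is probably not the root cause, and the request itself (or the server side) is the more likely suspect.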

@lesly.zerna, maybe you can investigate this issue? It’s outside of my skillset.

Thank you for reporting! I've seen that some models have been deprecated. Let me go over this course and talk with the engineering team!

Thank you for reporting! ^^

Thank you for reporting! Please note that this issue is caused by updates on the Together server, so we now need to explicitly pass some extra parameters when generating the model output.

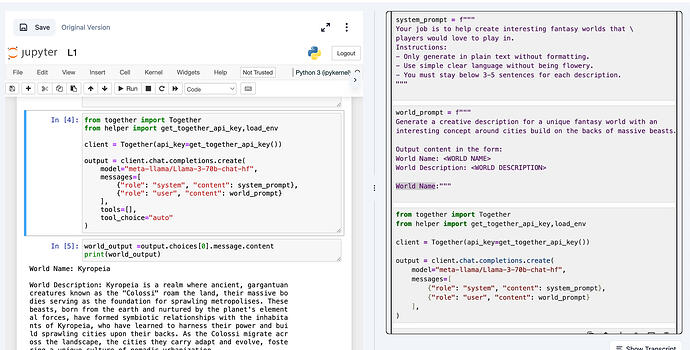

I just ran the entire Lesson 1 notebook with this updated code, and everything works fine!
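Because the fix means adding the same extra parameters to every chat call, one way to patch a notebook with many such cells is to route them all through a single helper, so the change lives in one place. This is a sketch under assumptions: the helper name `chat` and the `EXTRA_PARAMS` dict are hypothetical, and the dict is left empty on purpose because the exact parameter names appear only in the screenshot above, not in the text of this thread. Copy them in from the updated notebook.

```python
from typing import Any, Dict, List

# Hypothetical: fill this in with the extra parameters from the updated
# notebook (shown in the screenshot). Left empty because their exact
# names are not reproduced in the text.
EXTRA_PARAMS: Dict[str, Any] = {}


def chat(
    client: Any,
    model: str,
    messages: List[Dict[str, str]],
    **kwargs: Any,
) -> Any:
    """Send one chat request, always including EXTRA_PARAMS.

    Keyword arguments passed per call (e.g. temperature=1.0) take
    precedence over EXTRA_PARAMS when both set the same key.
    """
    params = {**EXTRA_PARAMS, **kwargs}
    return client.chat.completions.create(model=model, messages=messages, **params)
```

Each original cell such as `client.chat.completions.create(model=..., temperature=1.0, messages=[...])` would then become `chat(client, model_name, messages, temperature=1.0)`, and any future server-side change only requires editing `EXTRA_PARAMS`.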

In the coming weeks, I'll update the notebooks, and the changes will be reflected on the platform. For now, I'm attaching the full L1 notebook with the corrected code!

Thank you again for reporting this!

L1.ipynb (19.1 KB)