I think the confusion comes from an incorrect assumption about how indexing works. You are indexing at the “outer” level here. If an object at one of the earlier positions is itself a compound object with multiple layers, that doesn't matter: from the POV of the outer level of indexing, it counts as one element. To put it another way, I can have a “list of lists”, right? Which list is the 3rd one in the list of lists does not depend on how many elements the first list has. Why is that hard to understand?
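Here is a minimal plain-Python illustration of that point. The list contents are made up for the example:

```python
# A "list of lists": each inner list is ONE element of the outer list,
# no matter how many items that inner list holds.
list_of_lists = [
    [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],  # index 0: ten items, but still one element
    ["a"],                            # index 1
    ["x", "y", "z"],                  # index 2: the "3rd list"
]

print(len(list_of_lists))     # 3: the outer level only sees three elements
print(list_of_lists[2])       # ['x', 'y', 'z']
print(len(list_of_lists[0]))  # 10: the inner length is a separate question
```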

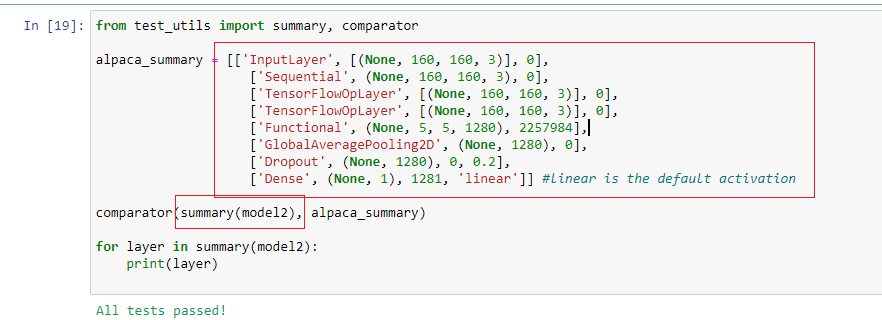

The information is all there in this listing in your earlier post:

- position 0: `['InputLayer', [(None, 160, 160, 3)], 0],`
- position 1: `['Sequential', (None, 160, 160, 3), 0],`
- position 2: `['TensorFlowOpLayer', [(None, 160, 160, 3)], 0],`
- position 3: `['TensorFlowOpLayer', [(None, 160, 160, 3)], 0],`
- position 4: `['Functional', (None, 5, 5, 1280), 2257984],`
- position 5: `['GlobalAveragePooling2D', (None, 1280), 0],`
- position 6: `['Dropout', (None, 1280), 0, 0.2],`
- position -1 (i.e. 7): `['Dense', (None, 1), 1281, 'linear']]` (linear is the default activation)
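If it helps, here is the same listing typed in as an ordinary Python list. This is a hypothetical transcription for illustration, not something Keras returns in exactly this form. Indexing it behaves just as described: the Functional entry is one element at index 4, regardless of how many layers live inside it.

```python
# Hypothetical transcription of the summary listing as a plain Python list.
# Each entry is [layer type, output shape, parameter count, ...extras].
summary = [
    ['InputLayer', [(None, 160, 160, 3)], 0],
    ['Sequential', (None, 160, 160, 3), 0],
    ['TensorFlowOpLayer', [(None, 160, 160, 3)], 0],
    ['TensorFlowOpLayer', [(None, 160, 160, 3)], 0],
    ['Functional', (None, 5, 5, 1280), 2257984],
    ['GlobalAveragePooling2D', (None, 1280), 0],
    ['Dropout', (None, 1280), 0, 0.2],
    ['Dense', (None, 1), 1281, 'linear'],
]

print(len(summary))    # 8 outer entries in total
print(summary[4][0])   # Functional: the pretrained base model, counted once
print(summary[-1][0])  # Dense: index -1 is the same entry as index 7
```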

So the 5th element (index 4) is this one:

`['Functional', (None, 5, 5, 1280), 2257984],`

That is a Keras Functional object which (as you mentioned) itself has 155 layers. It’s the actual pretrained MobileNetV2 model that was imported and then included at that point in the alpaca_model logic.

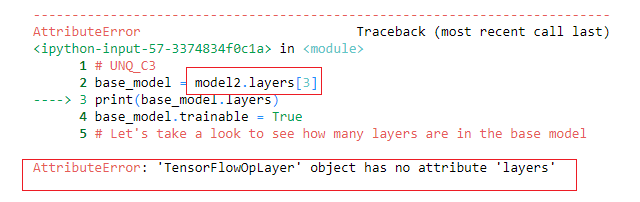

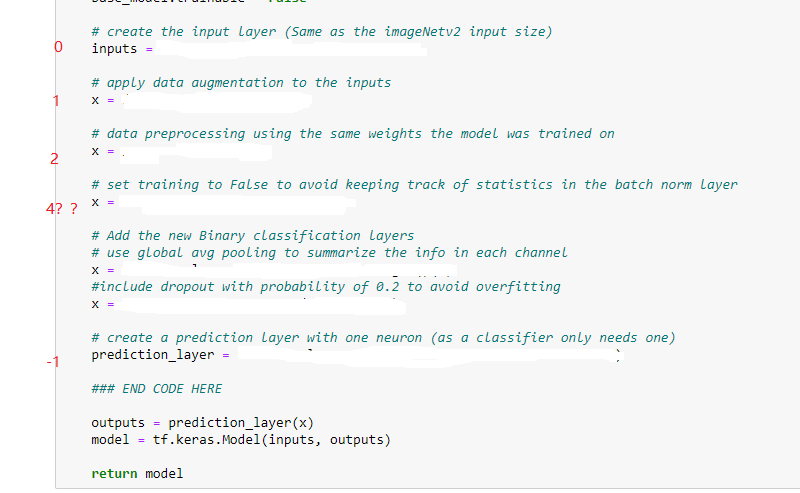

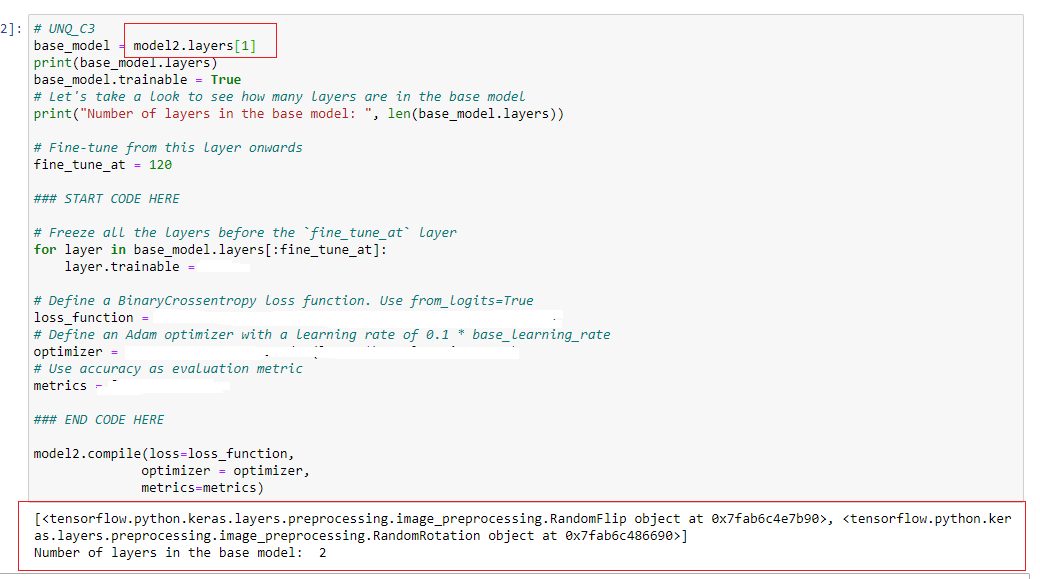

You can look back at the logic you wrote to construct the alpaca_model and figure out what is happening. The “Sequential” layer at index 1 is the data augmentation output (a Sequential model with 2 layers). The two TensorFlowOp layers that follow it (indices 2 and 3) are created by the preprocess_input call. You can prove that to yourself: comment out the preprocess_input line in the alpaca_model logic, also comment out the invocation of the comparator so that it doesn’t “throw”, and then print the layers of the resulting model. You should see that the two TensorFlowOp layers are gone.

So the reason your original enumeration did not make sense is that the single preprocess_input call shows up as two layers, at indices 2 and 3. That function is imported from Keras and here’s the docpage. You can see it scales the pixel values to be between -1 and 1, which takes two steps: a division (by 127.5) and then a subtraction (of 1).

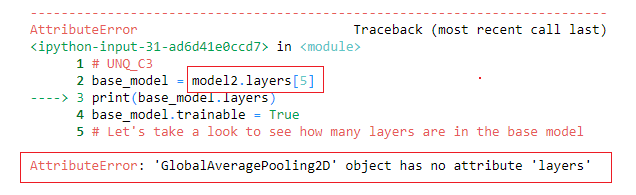
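A quick plain-Python sketch of that two-step scaling, assuming the 'tf' mode formula used for MobileNetV2 in the Keras source (divide by 127.5, then subtract 1). `scale_pixel` is a hypothetical helper for illustration, not part of Keras:

```python
# Hypothetical helper mirroring the two ops that preprocess_input adds to the
# graph ('tf' mode): one division and one subtraction, hence two layers.
def scale_pixel(value):
    divided = value / 127.5  # first op: maps [0, 255] to [0, 2]
    shifted = divided - 1.0  # second op: maps [0, 2] to [-1, 1]
    return shifted

for v in (0, 127.5, 255):
    print(v, "->", scale_pixel(v))
```

The endpoints land exactly on -1 and 1 (with 127.5 mapping to 0), which is the range the docpage describes.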

So, yes, 4 is a magic number. But it’s pretty clear why they set it to that value, right? They know the structure of the model they created, and index 4 is where the included pretrained model sits.
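If you’d rather not rely on the magic number in your own code, one option is to look the entry up by type. This is a sketch over a simplified, hypothetical list of layer type names, and `index_of_first` is my own helper. In real Keras code, `model.get_layer(name)` lets you look a layer up by name instead.

```python
# Hypothetical, simplified list of the layer types from the listing above.
layer_types = [
    'InputLayer', 'Sequential', 'TensorFlowOpLayer', 'TensorFlowOpLayer',
    'Functional', 'GlobalAveragePooling2D', 'Dropout', 'Dense',
]

def index_of_first(types, wanted):
    """Return the outer index of the first entry whose type equals `wanted`."""
    for i, t in enumerate(types):
        if t == wanted:
            return i
    raise ValueError(f"no layer of type {wanted!r}")

base_index = index_of_first(layer_types, 'Functional')
print(base_index)  # 4
```

The search and the hardcoded 4 give the same answer here; the lookup just keeps working if you later add or remove a layer in front of the base model.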